How publishers should use The SEO Web Almanac

The things I found interesting from the 2024 Web Almanac Review (and you should too!)

What is the SEO Web Almanac?

If I have to tell you what the SEO Web Almanac is, I’m going to have to ask whether you have opposable thumbs. Is your partner just happy that you aren’t flicking shit at the walls and picking your own fleas?

Straight from the horse’s mouth:

“The Web Almanac’s SEO chapter focuses on the critical elements and configurations influencing a website’s organic search visibility. The primary goal is to provide actionable insights that empower website owners to enhance their sites’ crawlability, indexability, and overall search engine rankings...”

It combines data from HTTP Archive, Lighthouse, Chrome User Experience Report, and custom metrics to document the state of SEO across the web.

It’s worth noting that they only analyse each site's homepage and one inner page. While that may seem limiting, we are talking about millions of websites, on both mobile and desktop.

It is unequivocally the most comprehensive SEO web analysis out there.

What does it cover?

A lot. It’s probably a little too long for my liking this time, but there are some semi-precious stones in here. Broadly:

Crawlability and indexation

Canonicalisation

Page experience

On-page SEO

Links

Word count

Structured data

AMP

Internationalisation

The six things I found the most interesting for publishers

All of these are things you should double-check you’re not doing.

Crawlability and indexation

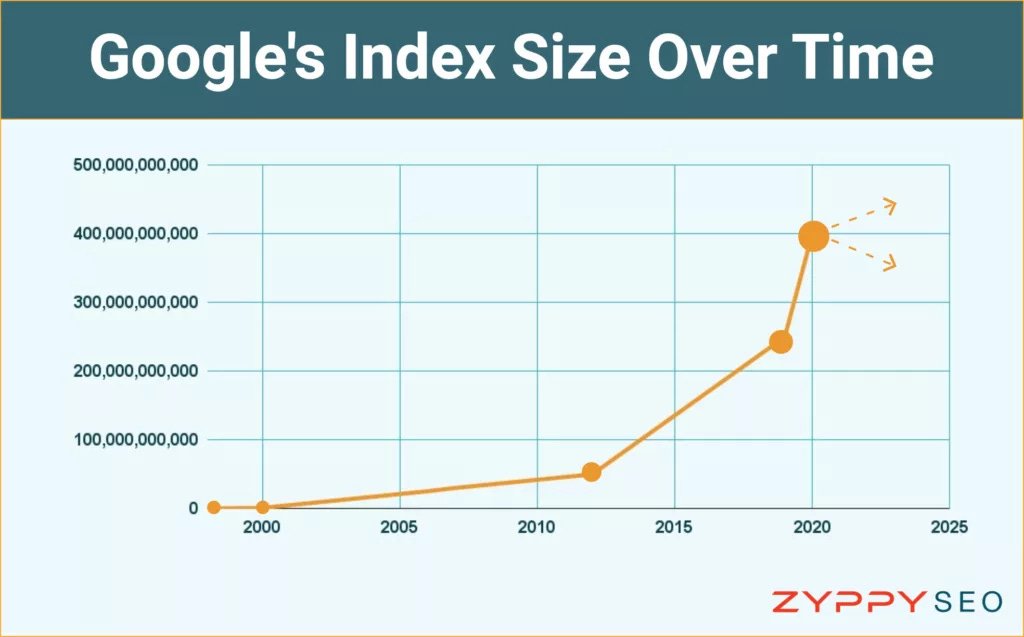

In 2022, Bing shared that its crawler discovered nearly 70 billion new pages daily. However, during this year’s antitrust suit against Google, it was revealed that Google’s index contains only around 400 billion documents.

That means far more pages are crawled than indexed. For publishers who pride themselves on content production, this is something to be aware of, particularly smaller publishers. Make sure your content adds value.

According to Gary Illyes, Google checks the robots.txt files of around four billion hostnames every day.

No wonder they need the money.

The web is bigger than ever. Web pages are bulkier, more metadata is stored, multimedia is richer and electricity has never cost more. Companies this greedy for money don’t like spending it, and I’m sure a good proportion of this index adds very little value.

This is just the start of shitty ads in organic listings and AI Overviews (AIOs) telling me to eat glue. Interesting that Google has spent so much time and effort trying to combat shit content while flooding its own SERPs with it…

I wouldn’t be surprised if Google started knocking on doors for money over the coming years. Door-to-door ad sales from the world’s most expensively created and poorly communicated search engine.

Fast becoming a secular brand of the Mormon church. If you see Sundar Pichai coming down the street with a double-glazing brochure, don’t say I didn’t warn you. Reddit-sponsored windows incoming.

IndexIfEmbedded tag

If you’re a big publisher, chances are you will use a lot of iFrames. I’m mildly ashamed to say we certainly do. But let him without sin and all that.

In January 2022, Google introduced a new robots rule: IndexIfEmbedded. It can be set in a robots meta tag or in the X-Robots-Tag HTTP header (headers are how the server and the client, like a browser, exchange extra information about a request), giving you indexing control over resources used to build a page.

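A common use case is controlling indexation of content served in an iframe: IndexIfEmbedded lets Google index that content as part of the page that embeds it, even when the iframe’s own URL carries a noindex rule. It only has an effect when paired with noindex.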
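If your iframes are served from your own stack, the server side can be as small as the sketch below. It assumes a hypothetical `/embed/` path prefix for embeddable content and uses Python’s standard-library `http.server` purely for illustration; your CMS or CDN will have its own way of setting response headers. The header checker is simplified and ignores user-agent-scoped values like `googlebot: noindex`.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical path prefix under which embeddable content (widgets, players) is served.
EMBED_PREFIX = "/embed/"


def robots_headers_for(path: str) -> dict[str, str]:
    """Return the robots-related response headers for a request path."""
    if path.startswith(EMBED_PREFIX):
        # Keep the standalone embed URL out of the index, but allow its content
        # to be indexed as part of any page that embeds it in an iframe.
        return {"X-Robots-Tag": "noindex, indexifembedded"}
    return {}


def allows_indexing_when_embedded(x_robots_tag: str) -> bool:
    """True if an X-Robots-Tag value pairs noindex with indexifembedded.

    Simplified: ignores user-agent-scoped values such as "googlebot: noindex".
    indexifembedded does nothing without noindex, so both must be present.
    """
    rules = {rule.strip().lower() for rule in x_robots_tag.split(",")}
    return {"noindex", "indexifembedded"} <= rules


class EmbedHandler(BaseHTTPRequestHandler):
    """Toy handler that attaches the robots headers to every GET response."""

    def do_GET(self) -> None:
        body = b"<p>Embedded content</p>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        for name, value in robots_headers_for(self.path).items():
            self.send_header(name, value)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run `HTTPServer(("localhost", 8000), EmbedHandler).serve_forever()` (also from `http.server`) and request an `/embed/…` URL with `curl -i` to see the header.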

The 7.6% of pages with an iFrame in 2024 represent an 85% increase from 2022, which in itself is interesting. Despite the technology being seen as second-rate, its ease of use means the pros still outweigh the cons.

It is generally a bad thing for SEOs that content produced by a brand is not considered part of the brand’s web page when it is embedded. The indexation and speed negatives associated with iFrames haven’t gone away.

Google and John Mueller have spoken at length about why iFrames are not the ideal solution to your problem. Content within them just isn’t guaranteed to be indexed. It’s something we’ve seen time and time again across thousands of pages.

The BBC moved to Shadow DOM a few years ago and seem to have seen very positive results: faster pages and more efficient indexation.

Invalid <head> elements

This is one of my favourites and something to be aware of as a technical SEO. The <head> of your page is where you store some of the most vital information. We’re talking the title tag, canonical tag, OG tags and structured data (if you use JSON-LD).

The whole nine, pitifully nerdy yards.

However, when a search engine encounters an invalid element in the <head> of the page, it assumes the <head> has ended and the <body> has begun, so anything after that point may not be read as part of the head. Given the head is where some of the most important information is stored, the impact of this can be huge.

Particularly for news sites and publishers.

I’ve written at length about Top Stories and how it works. Or doesn’t, depending on your point of view. Being precise is crucial. As is sending accurate, timely information. And the head of your page gives you the ability to control what Google sees in a split second.

If the head ends before you can serve all the pertinent information, you’ve done what we in the industry would describe as a shit job.

Broken HTML in the head improved by around 12% in 2024. Not bad, but there’s still work to do.

The elements that are valid in the <head> of the page are:

title

meta

link

script

style

base

noscript

template

The most commonly found invalid HTML elements are <img>, <div> and <a> tags. If you do nothing else, I suggest you review each template on your site to make sure none of these elements are present in the head.

To do this properly, check the rendered HTML of the page so you don’t miss elements dynamically inserted by JavaScript. You can, of course, just use a crawler that has this functionality, like Screaming Frog.

But a little real-time testing never hurt anyone. You have three good options:

The URL Inspection Tool

The ‘inspect’ element in Chrome

The View Rendered Source plugin

Copy the head and inspect it. Third-party tools like ChatGPT can help.
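Once you have the rendered HTML (saved from DevTools, for example), the check can also be scripted. A minimal sketch using Python’s built-in `html.parser`, assuming you feed it rendered rather than raw HTML: it lists invalid elements inside `<head>`, plus any valid head elements that appear after the first invalid one, since those are the ones most likely to be missed. It is a rough lint, not a full implementation of the HTML parsing rules, and it skips whatever sits inside `<template>` and `<noscript>`.

```python
from html.parser import HTMLParser

# Elements listed above as valid inside <head>.
VALID_HEAD_ELEMENTS = {"title", "meta", "link", "script", "style", "base", "noscript", "template"}
# Containers whose children this sketch does not inspect.
SKIP_CHILDREN = {"template", "noscript"}


class HeadAuditor(HTMLParser):
    """Collects invalid elements inside <head> and the head elements that follow them."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.skip_depth = 0     # how deep we are inside <template>/<noscript>
        self.invalid = []       # invalid tags, in document order
        self.after_break = []   # valid head tags that appear after the first invalid tag

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
            return
        if tag == "body":
            self.in_head = False
            return
        if not self.in_head:
            return
        if self.skip_depth:
            if tag in SKIP_CHILDREN:
                self.skip_depth += 1
            return
        if tag in SKIP_CHILDREN:
            self.skip_depth += 1
        if tag not in VALID_HEAD_ELEMENTS:
            self.invalid.append(tag)
        elif self.invalid:
            # Likely treated as <body> content, e.g. a canonical that gets missed.
            self.after_break.append(tag)

    def handle_endtag(self, tag):
        if not self.in_head:
            return
        if tag == "head":
            self.in_head = False
        elif tag in SKIP_CHILDREN and self.skip_depth:
            self.skip_depth -= 1


def audit_head(html: str) -> tuple[list[str], list[str]]:
    """Return (invalid_elements, head_elements_after_the_first_invalid_one)."""
    auditor = HeadAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.invalid, auditor.after_break
```

Run it over one rendered page per template. A tracking-pixel `<img>` placed above the canonical, for instance, comes back as `(['img'], ['link'])`; two empty lists are what you want.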

Page experience

I’m convinced that Core Web Vitals have been so successful because they have tangible metrics we can assign to them. It certainly makes getting things pushed through prioritisation and refinement sessions easier.

A rare thank you to Google from me, although IMO it has also inflated the importance of page experience. Once you reach a certain level, you’re fine. Don’t neglect other issues.
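Part of the appeal is that the “certain level” is published. Google documents “good” as LCP at or under 2.5 s, INP at or under 200 ms and CLS at or under 0.1, judged at the 75th percentile of real page loads. A minimal sketch classifying field data against those thresholds; the p75 inputs would come from something like CrUX, and the example values are made up.

```python
# Google's published "good" and "poor" boundaries for each Core Web Vital,
# applied to the 75th-percentile value of real-user page loads.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric: str, p75: float) -> str:
    """Classify one metric's 75th-percentile value."""
    good, poor = THRESHOLDS[metric]
    if p75 <= good:
        return "good"
    if p75 <= poor:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(p75s: dict[str, float]) -> bool:
    """A page passes only if all three metrics are rated 'good'."""
    return all(rate(metric, p75s[metric]) == "good" for metric in THRESHOLDS)


if __name__ == "__main__":
    field_data = {"LCP": 2.1, "INP": 240, "CLS": 0.05}  # made-up example values
    print({metric: rate(metric, value) for metric, value in field_data.items()})
    print(passes_core_web_vitals(field_data))
```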

Video

Guess what % of pages have video as of 2024?

5%. Isn’t that shit? Is it?

The more I think about it, the more I think it isn’t that shit. But good-quality video is still a vastly under-utilised format, for more reasons than one. Yes, a good-quality video adds immense value to a page.

Better rankings, better traffic, blah, blah, blah.

But that’s fairly binary* thinking. Video can be cut and served across multiple channels. It humanises your brand. It brings life to our otherwise pitiful existence.

The question you should be asking is: does our content stand out? Is it memorable? Would our brand be improved with more visually appealing content?

*I recently started learning Python and found out how binary code works. Something I just assumed was basically nonsense is, in fact, not that complicated. Entertain me for a second…
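The gist, as a tiny Python illustration (not taken from the Almanac): every binary digit is a power of two, switched either on (1) or off (0), so 13 is 8 + 4 + 1, written 1101. The function names are just for this example.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary by repeatedly dividing by 2."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit, rightmost first
        n //= 2
    return "".join(reversed(bits))


def from_binary(bits: str) -> int:
    """Read binary left to right: each step doubles the total, then adds the bit."""
    total = 0
    for bit in bits:
        total = total * 2 + int(bit)
    return total


if __name__ == "__main__":
    for n in range(6):
        print(n, to_binary(n))  # 0 0, 1 1, 2 10, 3 11, 4 100, 5 101
    print(from_binary("1101"))  # 13, i.e. 8 + 4 + 0 + 1
```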

You’ve just learnt binary code. You’re welcome.

Structured data

Structured data. I’m a bloody huge fan of structured data and I’ll tell you why. When done well it removes any uncertainty. That’s a huge thing for news sites, search engines and, I’ll wager, for LLMs.

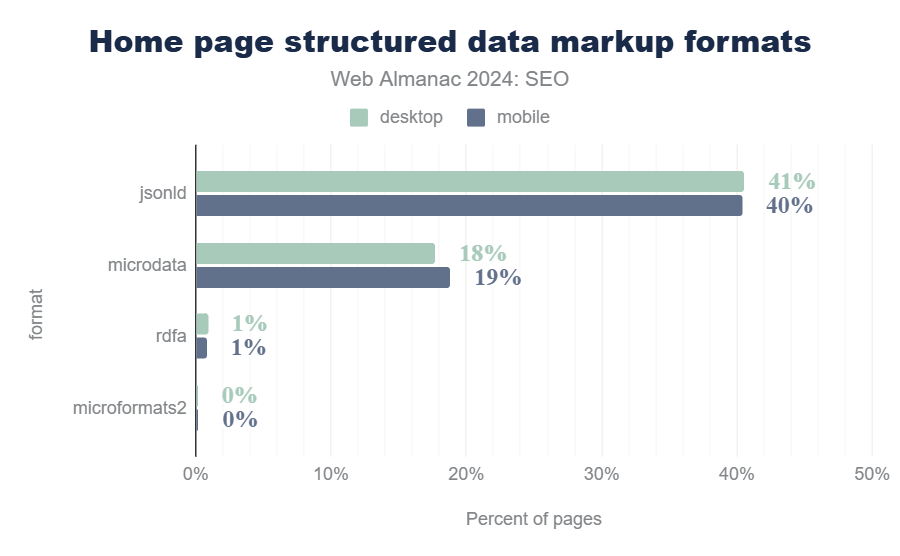

Take a homepage like The Telegraph’s. We recently moved from microdata to JSON-LD. Not a project I have a particularly fond memory of, but we were trying to future-proof our site and make markup much easier to update.

Unpicking microdata is far harder than updating JSON-LD because microdata weaves its metadata into the HTML itself. And JSON-LD has the benefit of being Google’s recommended format for structured data.

So a win-win.

And when implemented properly, you connect the dots for search engines and crawlers in the most effective way possible. You help create a strong, connected brand.

You can use Organization schema to connect other entities like The Sunday Telegraph and The Daily Telegraph with the sameAs property. Add in founding dates, founder, addresses, awards, and parent and sub-organisations. You can add an unparalleled level of detail and accuracy to each page.
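A minimal sketch of what that markup can look like, generated in Python for a hypothetical publisher. Every name, URL and date below is a placeholder, not The Telegraph’s real markup. It uses schema.org’s `NewsMediaOrganization` type. Note that schema.org defines `sameAs` as the URL of a reference page that unambiguously identifies the same entity (a Wikipedia entry or social profile, say), so sister titles sit more naturally under `parentOrganization` and `subOrganization`.

```python
import json

# Hypothetical publisher: every name, URL and date is a placeholder.
organization = {
    "@context": "https://schema.org",
    "@type": "NewsMediaOrganization",
    "@id": "https://www.example-news.com/#organization",
    "name": "The Example",
    "url": "https://www.example-news.com/",
    "logo": "https://www.example-news.com/logo.png",
    "foundingDate": "1900",
    # Reference pages that identify this same organisation.
    "sameAs": [
        "https://en.wikipedia.org/wiki/The_Example",
        "https://x.com/theexample",
    ],
    "parentOrganization": {"@type": "Organization", "name": "Example Media Group"},
    "subOrganization": [
        {
            "@type": "NewsMediaOrganization",
            "name": "The Sunday Example",
            "url": "https://www.example-news.com/sunday/",
        }
    ],
}


def to_json_ld_script(data: dict) -> str:
    """Serialise a schema.org object as a JSON-LD <script> for the page <head>."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # "<\/" is valid JSON for "</" and stops a value from closing the script early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(to_json_ld_script(organization))
```

Generating the markup from one source of truth like this is part of why JSON-LD is easier to maintain than microdata: the data lives in one object rather than being scattered across templates.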

There’s a reason Google likes brands. It’s the same reason almost 45% of all Google searches in the US were branded. They’re trustworthy. For people too.

You just need to look at the work Mark Williams-Cook and the team did uncovering the site quality and consensus scores Google uses when choosing sites to rank in rich features:

Google assigns site quality scores (from 0 to 1) to each domain and subdomain

Unless your score is above 0.4, you cannot rank for rich features

Google determines reliability through a consensus score, ranking content that aligns with widely accepted truths higher (the opposite is also true)

It is well worth a watch.

And this brilliant article by Gianluca Fiorelli shows how important technical SEO can be for improving your brand’s trustworthiness.

Google uses engagement metrics to help rank content more effectively. This isn’t new. But our bias towards recognised brands means the CTR for a Nike result is going to be much higher than for a smaller competitor. That click data is a significant part of Navboost.

You need to think about structured data outside of rich results. It is a way of labelling your content that helps search engines and LLMs understand who and what you are.

Inner page structured data

Just when you thought we were done with structured data… the beautiful Web Almanac team and I bring you inner page markup.

Nearly 30% of sites misuse schema markup for their inner pages.

The third most popular schema type for ‘inner’ pages is Website. The Web Almanac team do point out that that’s likely a templating mistake. But it’s at best unfortunate and at worst fairly stupid and lazy.

Schema is a great opportunity to provide real clarity about your brand and the page in question. So connect the dots better with proper markup:

ListItem

BreadcrumbList

Person

Article / BlogPosting

Particularly if you’re a news brand who cares about Top Stories.
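For an article page, a minimal sketch of the same idea: a `NewsArticle` with a `Person` author, plus a `BreadcrumbList` built from `ListItem`s, combined in one JSON-LD `@graph`. The helper name, its parameters and the example values are hypothetical; check the output against Google’s Article and Breadcrumb documentation and the Rich Results Test before shipping anything.

```python
import json


def article_page_graph(headline, url, published, author_name, author_url, crumbs):
    """Build JSON-LD for an article page (a hypothetical helper).

    crumbs: (name, url) pairs running from the homepage down to this article.
    """
    article = {
        "@type": "NewsArticle",
        "@id": url + "#article",
        "headline": headline,
        "url": url,
        "datePublished": published,  # ISO 8601, with a timezone offset
        "author": [{"@type": "Person", "name": author_name, "url": author_url}],
    }
    breadcrumbs = {
        "@type": "BreadcrumbList",
        "@id": url + "#breadcrumb",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": crumb_url}
            for position, (name, crumb_url) in enumerate(crumbs, start=1)
        ],
    }
    return {"@context": "https://schema.org", "@graph": [article, breadcrumbs]}


if __name__ == "__main__":
    home = "https://www.example-news.com/"
    story = home + "politics/example-story/"
    graph = article_page_graph(
        headline="Example headline",
        url=story,
        published="2024-11-20T09:00:00+00:00",
        author_name="Jane Doe",
        author_url=home + "authors/jane-doe/",
        crumbs=[("Home", home), ("Politics", home + "politics/"), ("Example headline", story)],
    )
    print(json.dumps(graph, indent=2))
```

Note there is no `WebSite` node here: the page describes the article, its author and where it sits in the site.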

What next

So, make sure you check the head of your page for errors, check your structured data is sending accurate, clear signals to search engines and LLMs, review your iFrame usage (and tagging) and, for the love of god, create more engaging content.