Crawl budget management for publishers and large sites

Tangible and proven ways you can create a more efficient site in the eyes of our glorious Googlebot overlord

TL;DR

Crawl budget represents the resource Googlebot allocates to crawling your site

An optimised crawl budget prevents resource wastage on broken links, redirect chains, or low-value pages

Crawl budget is finite. The more dead space there is - broken links, redirect chains, inefficient resource loading - the less time bots spend on what matters

Improve site speed, reduce server delays, enhance internal linking, and eliminate deadweight pages for maximum efficiency. Techniques like caching static assets, serving 304 response codes for archived content, and streamlining navigation are key strategies for publishers

Understanding crawl budget

Crawl budget is an SEO industry term that describes the amount of resource a bot (ok, Googlebot, who are we kidding) will spend crawling your site. Typically, bots enter through your most powerful pages and follow a network of links to discover new and updated content alongside website changes that may influence the overall quality of your site.

Why it matters

It matters because Googlebot has a finite resource and the web is expanding at a crazy rate. And, quelle surprise, not everything is of a quality worth storing.

Once Googlebot enters your site, you want to do all you can to direct it to the most important areas of your site. Areas that drive value to the business. As a news publisher, would you rather Google spent its time crawling 120-word news articles from 2005? Or your most up-to-date and theoretically highest quality articles?

Exactly.

Crawl budgets are a pretty good way of evaluating your site’s efficiency. They help you identify leakages (internal links to broken pages, redirect chains, and site speed issues) that cause crawlers to waste resources and leave your site before their time is up. That is never a good thing.

Treat bots like a hyperactive child. You know they’re going to wear themselves out and knacker themselves out eventually. So when you’ve got them, try to get them to focus on something productive. If these three sentences haven’t made it obvious, I don’t have children. These bots are my children.

What factors affect a website’s crawl budget?

As a publisher, the bigger and more powerful your site, the larger your crawl budget. By powerful, I mean the number of backlinks and mentions you have gained over the years, your backlink and publishing velocity and (presumably) quality user engagement signals. These are probably the biggest factors.

Ultimately, the impact you have as a brand will be rewarded. Google trusts brands, particularly brands that users return to. So build one and they will come.

How can I optimise it?

By making your site more efficient. Optimising your internal linking structure, removing deadweight pages, minimising errors and redirect chains and improving the speed of your site all have an immensely positive impact. The harder you make bots work, the less of your site they will ravenously consume.

When you phrase it like that, it’s really quite simple.

Site speed and efficiency are rarely talked about when discussing crawl budget. But they’re fundamental to creating a site that works for users and bots. For any speed-based initiative, you should also consider the impact it should have on bot content consumption.

Can you calculate your crawl budget?

You can. Very simply. If you have a verified domain property set up in Search Console, Google gives you some solid, albeit sampled, data on the total number of crawl requests in its Crawl Stats Report. Just divide the number of crawl requests by the number of days and voilà, you have a daily crawl budget.
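As a rough sketch of that sum, here is the calculation in Python. The request total and the 90-day window are made-up figures for illustration, so swap in whatever your own report shows.

```python
def daily_crawl_budget(total_crawl_requests: int, days: int) -> float:
    """Average number of crawl requests per day over the reporting window."""
    if days <= 0:
        raise ValueError("days must be a positive number")
    return total_crawl_requests / days


# Hypothetical figures: 4.5 million crawl requests over a 90-day window.
budget = daily_crawl_budget(4_500_000, 90)
print(f"{budget:,.0f} crawl requests per day")  # 50,000 crawl requests per day
```

Because the underlying data is sampled, treat the result as a trend line to watch over time rather than an exact allowance.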

And for big publishers, there are usually some opportunities to identify some obvious inefficiencies.

Underneath the main property, you get a breakdown of where Googlebot spends its time. Here you can see 52% of the bot’s resources are going on temporarily moved (302) files. That’s a very high number.

On closer inspection, we can see that these files are almost all JS and CSS files, none of which need to be moved temporarily. They’re static files that should exist on a single URL with a long-life caching policy—probably something like a year, often shown as 31536000 seconds.

This is a great opportunity to improve our site efficiency.

If you plan to update files like these regularly, you have a couple of options.

Versioning the file (e.g. script.v1.js, script.v2.js). This way, when the file changes, a new version with a unique URL ensures the browser fetches the latest file rather than serving the outdated cached version.

Use no-cache if the resource changes, but you still want to get some of the speed benefits of caching. The browser still caches a resource but checks with the server first to make sure the resource is still current.

But if the files are static, serve a long-life cache policy on the same URL and save that sweet, sweet crawl budget.
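To make those options concrete, here is a minimal sketch of how a server or CDN rule might choose a Cache-Control value. The function name, the extension list and the `changes_in_place` flag are my own assumptions for illustration, not part of any particular framework.

```python
ONE_YEAR_SECONDS = 31_536_000  # 365 days, the long-life value mentioned above

# Assumed list of static asset types; adjust to match your own site.
STATIC_EXTENSIONS = (".js", ".css", ".woff2", ".png", ".jpg", ".svg")


def cache_control_for(path: str, changes_in_place: bool):
    """Suggest a Cache-Control header value for a static asset.

    changes_in_place is True when the file is edited without its URL changing.
    Versioned files (script.v2.js) never change in place, so pass False.
    """
    if not path.endswith(STATIC_EXTENSIONS):
        return None  # leave HTML and other responses to your existing rules
    if changes_in_place:
        # Still cached, but revalidated with the server before every reuse.
        return "no-cache"
    return f"public, max-age={ONE_YEAR_SECONDS}"


print(cache_control_for("/static/script.v2.js", changes_in_place=False))
print(cache_control_for("/static/live-scores.js", changes_in_place=True))
```

The point of the sketch is that each asset keeps one stable URL and a predictable policy, rather than bouncing through a temporary redirect.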

The purpose of this kind of work is to ensure that page updates and improvements are picked up in a timely and consistent manner. Something that a more efficient site can deliver.

It’s important to remember that SEO is largely a probability game. So when creating and prioritising projects, consider how likely you think the positive outcome will be. A high value but improbable project may be less profitable than a low value, high probability one.

12 ways to improve your crawl budget

All of these are great places to start and come with benefits outside of just crawl budget improvements. Try to establish the areas that present the biggest issue for the user first.

Improve site and page performance

This is one of my favourite methods of improving crawl budgets because it’s so easy to prioritise. The benefits of a faster site are there to see for everyone. Crawl budget is a less tangible measure, but when your site loads faster, crawlers can navigate through each page and resource more efficiently.

For example, if it takes a bot an average of 10 seconds to crawl a page (or at least the first 15 MB of each page) and it allocates 10 minutes to your site, it can only crawl 60 pages. If you reduce the amount of time it takes for a bot to crawl a page to two seconds, it can crawl 300 pages in 10 minutes.
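The arithmetic in that example can be sketched as below. It is a deliberate simplification that assumes one page is fetched at a time, which is not how a real crawler schedules its requests.

```python
def pages_crawled(window_seconds: int, seconds_per_page: int) -> int:
    """Whole pages that fit into a crawl window at a fixed time per page."""
    return window_seconds // seconds_per_page


ten_minutes = 10 * 60
print(pages_crawled(ten_minutes, 10))  # 60
print(pages_crawled(ten_minutes, 2))   # 300
```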

You should consider more than just the size of a page or your ability to load resources efficiently.

The number of resources loaded

How you load assets (asynchronous or lazy-loading for example)

Reduced server response time (make sure you’re using a reliable hosting service and minimise server-side delays by optimising database queries and compressing resources)

Efficient caching policies (typically the max-age directive of 31,536,000 seconds is used for static assets)

Minimising render-blocking resources

Using a CDN

Frankly, Core Web Vitals have been such a success (albeit an incredibly over-used one) because there are specific, real-world measures attributed to them, a rarity in SEO.

Using Google’s new CrUX Vis tool, you can see how real-world Chrome users experience your competitors on the web. If you’re significantly behind the competition, then it’s worth deeper investigation.

Build quality links

Link quality and velocity show that your site is doing something right. It’s how search engines have been able to establish you as a respected industry resource for two decades.

By quality, I don’t mean domain authority. I mean generating links from sites that are relevant to the content at hand, have an equally impressive backlink and traffic profile and are generating quality links at scale. A good quality backlink drives referral traffic to your site. Another excellent signal for crawlers.

Bots follow user behaviour. Anything important to users and the wider community will be prioritised because bots use links as pathways to find content. Pages and sites with a more prominent backlink profile receive a higher crawl priority.

It’s not easy to generate backlinks. Once you have a page that does, use that page as a linking hub. Enhancing your internal linking from that page to relevant, important pages will support their crawl prioritisation.

Increase your content production

I’ve been in many conversations where people argue that impact is more important than output. In many ways yes. No arguments from me. But in a news world dominated by Top Stories in search, I don’t know if I agree.

Yes, you need to be a ratified news publisher and build a trusted brand. But content velocity is huge. Google loves fresh content. It’s obsessed with it. As are all the major algorithms. Fetid beasts that consume more and more.

I’d liken it to Jabba the Hutt or, for a nicher reference, Fat Albert. He probably wouldn’t be called Fat Albert anymore. Plus size Albert. Maybe even just Albert.

Regularly publishing fresh content signals to search engines you’re an active publisher. All things being equal, a site that creates 200 articles per day should be crawled twice as frequently as a site that creates 100 articles daily.

Now, I don’t advocate for the pointless publishing of content. It should still add value. My favourite way of describing this is to sweat your assets. Once you reach a certain publication volume, you as an SEO should be canny.

Publish quality videos as separate play pages

If you have images from an event or live blog, publish them as a gallery

Consider how a live blog can be cut into short, punchy separates from popular stories

Get access to your log file data

I love log file data. There, I’ve said it. Lock me up and throw away the key.

There is no better way to understand how crawlers access and view your site. Google’s Crawl Stats Report is useful for getting an overview of how often your site is crawled. But there’s no detail or depth involved and like everything Google gives us, it’s sampled.

With server logs, we can see which sections bots deem important and vice versa. You can make navigational and internal linking decisions based on the frequency of crawling at a page and subfolder level depending on the size of your site. If you get real-time access to log data, you can find prominent 404s and redirect chains by combining response code, log and page-level data.

It’s a nerdy treasure trove.

It’s how we find wasted crawl budget on areas like parameter URLs, broken and redirecting pages and the impact of adding pages into primary or secondary navigations.

This kind of data is both valuable and fascinating. It’s interesting to see reasonably high numbers of crawl requests to parameter pages that don’t generate clicks. It warrants investigation.
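If you want a feel for what this looks like in practice, here is a minimal sketch that tallies Googlebot requests by section, response code and parameter use. It assumes your server writes the common "combined" access log format, and it trusts the user-agent string, which can be spoofed, so treat the numbers as indicative. The sample lines are invented.

```python
import re
from collections import Counter

# Assumes the "combined" access log format; adjust the pattern for your server.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarise_googlebot(lines):
    """Count Googlebot requests by top-level section, status code and parameter URLs."""
    sections, statuses, parameter_hits = Counter(), Counter(), 0
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        path = match["path"]
        if "?" in path:
            parameter_hits += 1
        first_segment = path.split("?")[0].strip("/").split("/")[0]
        sections["/" + first_segment] += 1
        statuses[match["status"]] += 1
    return sections, statuses, parameter_hits


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_lines = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /news/story-1 HTTP/1.1" 200 2326 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2024:13:55:40 +0000] "GET /news/old-story HTTP/1.1" 404 512 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2024:13:55:44 +0000] "GET /sport/results?sort=asc HTTP/1.1" 301 0 "-" "{BOT}"',
    '203.0.113.9 - - [10/Oct/2024:13:55:50 +0000] "GET /news/story-1 HTTP/1.1" 200 2326 "-" "Mozilla/5.0"',
]

sections, statuses, parameter_hits = summarise_googlebot(sample_lines)
print(sections.most_common(), dict(statuses), parameter_hits)
```

From here it is a short step to joining the counts against click data to see which heavily crawled parameter URLs earn nothing.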

Internal linking

Internal linking is for users first and foremost. Your primary and secondary navigation, ‘More Stories’ blocks and text-based internal linking should be geared up for people. Google’s Quality Rater Guidelines reference the value of the main content and supplementary content of the page. You can read about their recent updates here.

The supplementary content includes menu items, internal linking blocks, the author etc and should ‘add value to the page.’ It shouldn’t be used to promote irrelevant commercial content.

But it’s worth considering what impact the changes and improvements you make will have on crawler access. The number and quality of links into sections of your site will be mirrored by bot access. It’s your chance to shape how you are viewed by Google.

I suggest optimising menu items and blocks with sitewide implications. Then break sections of the site down and identify your priority evergreen and tag pages to help shape your site effectively. Linking to a couple of key tag pages in the first paragraph or two of each page promotes a strong site structure that will be mirrored by bot access.

Totally unproven, but I could see how your menu is linked to your topical authority. It’s your shop window. If you’re a political site that doesn’t prioritise primarily political pages, there’s a disconnect between who you think you are and how Google sees you.

304 - not modified response code

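My favourite tip on this list. Not because it’s the best. But because it’s the most unique. The 304 (Not Modified) response code tells a client that the resource hasn’t changed since its last visit, so there is no need to retransmit it. It’s an HTTP status sent in reply to a conditional request (one carrying an If-Modified-Since or If-None-Match header), not something that sits in the <head> of the page. A crawler that receives a 304 can reuse the copy it already holds rather than downloading the full page again, until the page changes and your server answers with a 200.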

It gives you control over your crawl budget without forcing you to delete content. For any of you who work in huge publications and newspapers and want to keep your archived content, this is gold dust.

The easiest way to roll this out is to identify URL patterns of old and inconsequential pages from some time ago. Then speak to your tech team to establish how easy this would be.

It’s worth testing this to begin with to see if there’s any impact on pages linked to from these pages. I don’t think those links will be devalued, but it’s always worth testing these types of changes first.
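For the conversation with your tech team, here is a minimal sketch of the decision a server makes when a request arrives with an If-Modified-Since header. The function name and the archive date are hypothetical, and a real implementation would normally live in your web server, CDN or framework rather than in hand-written code.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def conditional_status(if_modified_since, last_modified):
    """Return 304 when the client's stored copy is still current, otherwise 200."""
    if not if_modified_since:
        return 200  # no conditional header: send the full page
    try:
        client_copy_time = parsedate_to_datetime(if_modified_since)
    except (TypeError, ValueError):
        return 200  # unparseable header: send the full page
    if client_copy_time.tzinfo is None:
        client_copy_time = client_copy_time.replace(tzinfo=timezone.utc)
    # HTTP dates have one-second resolution, so ignore microseconds.
    if last_modified.replace(microsecond=0) <= client_copy_time:
        return 304
    return 200


# Hypothetical archived article, last edited in March 2005.
archived = datetime(2005, 3, 14, 9, 30, tzinfo=timezone.utc)
header = format_datetime(archived, usegmt=True)

print(conditional_status(header, archived))  # 304
print(conditional_status(None, archived))    # 200
```

The useful property for archives is that the page stays live and linkable, while repeat visits cost the crawler a header exchange instead of a full download.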

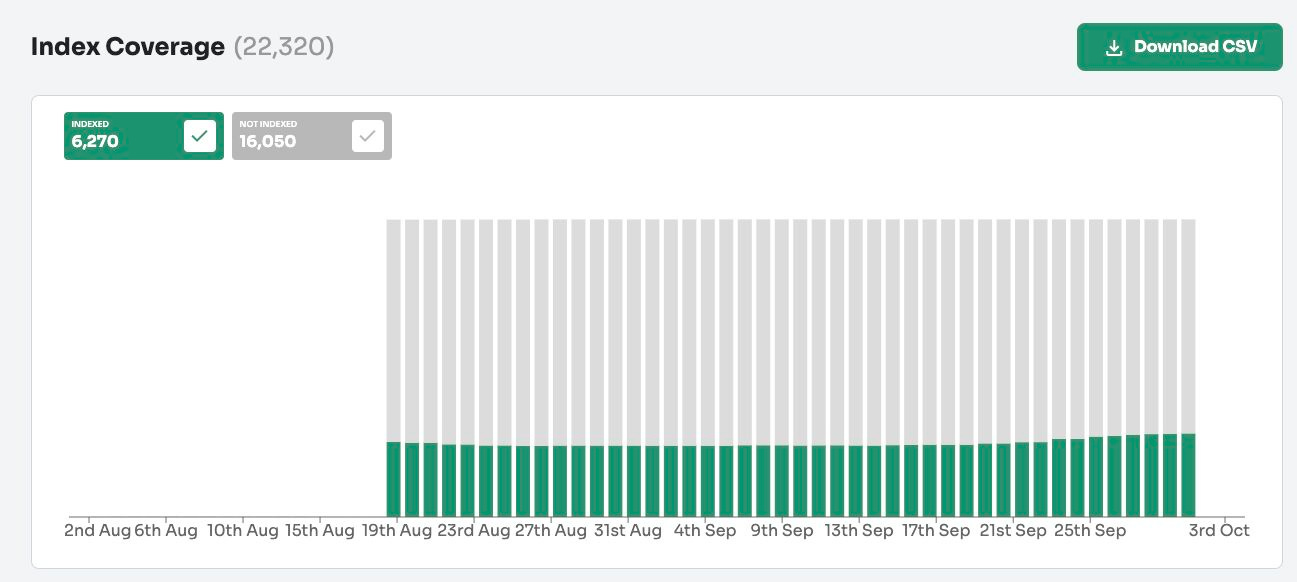

Review the page indexing report

Whilst the page indexing report has a 1,000-row limit - a particular hindrance for large site owners - it can throw up some incredibly useful nuggets of information. The most obvious wins tend to come when you identify pages at a subfolder or page template level that have obvious indexing issues.

The ‘crawled - currently not indexed’ and ‘discovered - currently not indexed’ rows tend to be your best route to identifying indexing issues. They can signify linking or quality issues, robots.txt configuration problems or broader crawl budget concerns.

There are people like Adam Gent who are working on a page indexing report that removes the row limits. Something that will be extremely useful for big site owners. Whilst it’s not out yet, you can follow Adam’s progress at Indexing Insight. Highly recommended.

A reminder that pages can move backwards. Indexed pages can move into the ‘crawled - currently not indexed’ report and even into the ‘URL is unknown to Google’ report.

Google is clearly working on keeping only content that serves a purpose in its index.

And the Crawl Stats Report

I have covered this above, so won’t go into much more detail. But the Crawl Stats Report gives a great indication of your crawl budget and where Googlebot is spending its resources. It takes a bit of getting used to if you’re new to it, but you can find some brilliant tidbits of information. Particularly for large sites.

Best utilise XML sitemaps

Do sitemaps improve the findability of your content and improve indexing? If you have a site with a strong backlink profile, reasonable internal linking configuration and content worth indexing, I wouldn’t say so.

However, sitemaps can be a really useful way to identify indexing issues of your own.

Identify discrepancies between submitted and indexed pages

See broken, redirecting or server response errors

Identify patterns in pages that end up in reports like ‘crawled - currently not indexed’

This is a little different for news publishers. Your Google News sitemap is undoubtedly a useful resource to help Google find time-sensitive pages to use in Google News and Top Stories. News content is reliant on accuracy and concise, timely information. So to maximise your chances in the frenetic news ecosystem, a news sitemap with a title, URL and modified date is essential.
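As a minimal sketch, a news sitemap entry can be generated with Python’s standard library. Note that Google’s news sitemap schema carries the date in a publication_date tag alongside the publication name and language. The publication name, URL and headline below are invented.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("news", NEWS_NS)


def news_sitemap(articles, publication_name, language="en"):
    """Build a news sitemap string from dicts with url, title and published keys."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for article in articles:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = article["url"]
        news = ET.SubElement(url, f"{{{NEWS_NS}}}news")
        publication = ET.SubElement(news, f"{{{NEWS_NS}}}publication")
        ET.SubElement(publication, f"{{{NEWS_NS}}}name").text = publication_name
        ET.SubElement(publication, f"{{{NEWS_NS}}}language").text = language
        ET.SubElement(news, f"{{{NEWS_NS}}}publication_date").text = article["published"]
        ET.SubElement(news, f"{{{NEWS_NS}}}title").text = article["title"]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical article for illustration only.
sitemap_xml = news_sitemap(
    [{
        "url": "https://www.example.com/news/story",
        "title": "Example headline",
        "published": "2024-10-10T13:55:00+00:00",
    }],
    publication_name="The Example Times",
)
print(sitemap_xml)
```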

Minimise broken links and redirects

Simple and obvious right? When crawlers start diving deeper into your site, every broken link and redirect they interact with wastes resource.

As an SEO at a large publisher, I’m well aware of how difficult this is to manage. Cloning articles has been a newsy hack for years. Google prefers fresh URLs for news coverage and whilst this hack seems like a great way to drive some extra traffic, there’s no such thing as a free lunch. Redirect chain after redirect chain.

My advice is don’t strive for perfection but address the most concerning issues: redirects and redirect chains with a high number of crawl requests, and broken pages with significant traffic or quality backlinks.

Add a rule to your sitemaps that excludes broken and redirecting pages

Minimise redirect chains

Fix broken pages with a high number of requests and/or traffic

Ensure menu items don’t pass through redirects
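Two of the items above can be sketched in a few lines, assuming you already have crawl data exported as a source-to-target redirect map and a URL-to-status lookup. Both data structures and all the paths are hypothetical.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map and return every URL visited."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        looped = next_url in chain
        chain.append(next_url)
        if looped:
            break  # redirect loop: stop once we revisit a URL
    return chain


def sitemap_urls(pages):
    """Keep only URLs that answer 200 directly, dropping redirects and errors."""
    return [url for url, status in pages.items() if status == 200]


# Hypothetical data: a cloned article redirected twice.
redirects = {"/news/story": "/news/story-2", "/news/story-2": "/news/story-3"}
chain = redirect_chain("/news/story", redirects)
print(chain, "hops:", len(chain) - 1)

pages = {"/news/story-3": 200, "/news/story": 301, "/news/gone": 404}
print(sitemap_urls(pages))
```

Any chain with more than one hop is a candidate for pointing the first URL straight at the final destination.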

Use your robots.txt effectively

Is the robots.txt file a good way of preventing Google from accessing areas of the site you don’t want it to?

Yes.

Can it support proper crawl budget management?

Yes.

Can it also be misused and cause major indexing and crawlability headaches down the line?

Wait for it…

Yes. I bet you didn’t see that coming. If sections of the site have been indexed that shouldn’t be or vice versa, it can be a real pig to sort out. A pig without any obvious upsides in lots of scenarios. So if you restrict access to any section, make sure you involve the relevant stakeholders.

If you’re looking to become the de facto robots.txt guy or gal, I’d suggest setting a process up whereby you are automatically notified when a change is about to occur. Blocking payment subdomains on paywalled sites is a no-brainer. Ditto martech confirmation content. But just be a little wary. Don’t use it to block crawling of individual pages.
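One way to make that process safer is to test a proposed robots.txt against a list of URLs that must stay crawlable before the change ships. Here is a minimal sketch using Python’s built-in parser. The rules and URLs are invented, and the standard library parser does not replicate every detail of how Google interprets robots.txt (wildcards, for instance), so treat it as a first check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; the paths are not from any real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /newsletter/confirmed/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt stops the user agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls_to_check = [
    "https://www.example.com/checkout/basket",
    "https://www.example.com/politics/",
    "https://www.example.com/newsletter/confirmed/thanks",
]
print(blocked_urls(ROBOTS_TXT, urls_to_check))
```

If a priority section ever shows up in the blocked list, that is your cue to stop the release and talk to the stakeholders.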

Proper pagination implementation

I think pagination is unlikely to be a serious issue for almost any site if it’s implemented consistently. As long as you use self-referring canonicals (don’t canonicalise all pages to page 1, the pages are intrinsically different) and ensure that the pages are crawlable and controlled you’ll be fine.

Google has provided their own recommendations for pagination. The basic principles stand, even if they are a little e-commerce heavy. Sites like the NYT do this well. They load the first 9 pages of paginated content with unique, crawlable, indexable links. Then page 10 becomes an infinite scroll. It prevents Google from crawling to page 3,000 and maintains a flow of link equity and a show of topical authority around certain topics.

Worth mentioning here that Google doesn’t click buttons or trigger any other actions on the page. So if you use infinite scroll JavaScript or a Load More button, Google is unlikely to see articles that exist beyond that point. So any story that falls past ‘page 1’ will no longer be crawled via your paginated pages.
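The principles in this section can be sketched as below: each paginated page gets a self-referring canonical, and only the first few pages are exposed as plain, crawlable links. The ?page=N URL pattern and the nine-page cut-off are assumptions for illustration, not a description of how any particular site builds its URLs.

```python
def pagination_plan(section_url, current_page, crawlable_pages=9):
    """Self-referring canonical plus plain link targets for the first few pages."""

    def page_url(page):
        # Assumed URL pattern: page 1 is the section URL itself.
        return section_url if page == 1 else f"{section_url}?page={page}"

    return {
        "canonical": page_url(current_page),
        "crawlable_links": [page_url(p) for p in range(1, crawlable_pages + 1)],
    }


plan = pagination_plan("https://www.example.com/politics", current_page=3)
print(plan["canonical"])             # https://www.example.com/politics?page=3
print(len(plan["crawlable_links"]))  # 9
```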

That’s all folks

A long, but hopefully a goody. As always, any questions or thoughts let me know. If you’re looking for some further reading, Barry Adams has two very good articles that are well worth your time.
