Why updates to Google's Page Quality Rater Guidelines matter for Publishers

Google has made some pertinent and possibly poignant changes to its Quality Rater Guidelines. Here's why you should care as a publisher.

I love the Quality Rater Guidelines. There, I’ve said it. Lock me up and throw away the key.

They are the closest we will get to understanding how Google Quality Raters (real people I might add) are instructed to view and analyse websites.

But my god, are they drab. All the style and panache of a new dad trying to break in his orthopaedic trainers. Yes, we know your feet hurt, but is it really worth it?

They truly are abysmally formatted. By one of the world’s richest tech companies I might add.

A shame. But maybe they didn’t think there’d be a community of nerds that would analyse what they do to the nth degree. Still, high standards and all that. I’d like to think I wouldn’t let this see the light of day.

At a company I once worked for, I was told;

“We don’t feedback. We feed forward.”

It runs through my head at least once a week. I hate it.

TL;DR

You must demonstrate credibility and place a stronger emphasis on publisher and creator reputation to achieve the highest rating

The updated guidelines emphasise identifying low-value, mass-produced content and untrustworthy pages, particularly if their sole purpose is to make money

Google has introduced more examples of Experience vs. Expertise, especially for content where personal experience is valued (hint hint, affiliates)

You must, must, must make content that has a level of originality and uniqueness. You have to stand out.

What are the Quality Rater Guidelines?

These guidelines serve as the official handbook for Google’s Quality Raters. Real, third-party people follow these guidelines to score search results. Their evaluations help train Google’s AI models and inform algorithm updates.

Raters assess the quality of web pages, primarily through Page Quality (PQ) and Needs Met (NM) ratings, and those assessments shape how Google refines its ranking criteria. I’d go as far as saying it is crucial that SEOs and publishers understand how their content is judged. Pre-algorithm I might add.

But I would say that. I’ve spent days analysing updates and writing this article. You be the judge.

What does it cover?

A lot, in fairness:

How Google understands and ranks pages (page purpose, E-E-A-T, main vs supplementary content, user intent and website and content creator reputation)

How different types of content are ranked (news, informational, creative and YMYL)

What qualifies a page’s quality rating (from lowest to highest, with clear examples)

The Needs Met scale

AI generated content (scaled content abuse)

Why should you care?

If the above hasn’t convinced you then let me take you on a trip through (recent) memory lane.

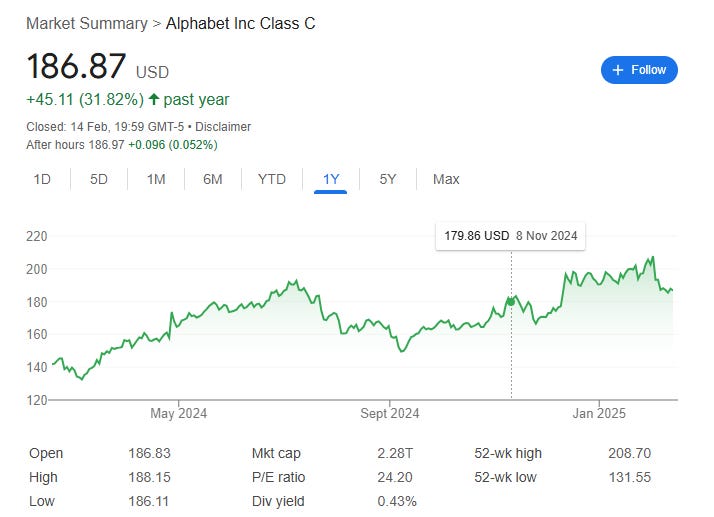

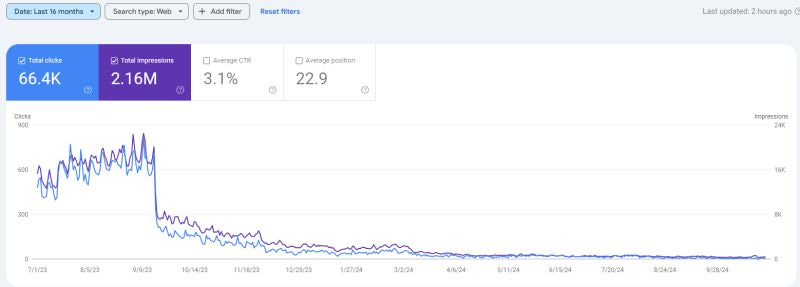

The last few years will live long in the memory of SEOs. Particularly publisher SEOs. The 2023 Helpful Content Update - one I’d like to lovingly rename the Helpful Content My Arse Update.

Yes, I did have a site destroyed by it and no I’m not bitter.

The Site Reputation Abuse scandal. Sorry, update. This took up far too much of our time this year. And whilst I broadly agree with Google’s assessment that their SERPs were full of thin, affiliated, duplicated shit, they would do well to remember that they built this city…

“They built this city on links, authority and engagement signals.”

Doesn’t quite have the same ring to it.

In January of last year, there was a really interesting piece of longitudinal analysis done by German university researchers titled ‘Is Google Getting Worse.’

If you don’t want to read the full PDF, yes. Yes, it is.

It examined the prevalence of SEO spam (not my words), scaled content and affiliate marketing abuse in Google, Bing, and DuckDuckGo search results over a year.

In summary;

The study analysed 7,392 product review queries and found the majority of high-ranking product reviews contained affiliate marketing links

These high-ranking pages were more repetitive, list-like, and contained shallow, templated content rather than in-depth analysis

While Google, Bing, and DuckDuckGo attempt to counter spam and scaled content abuse, it returns quickly after ranking adjustments

Authentic review sites are being pushed down in rankings, while poor-to-average quality affiliate pages on more powerful domains dominate

Google’s foundations were crumbling. They still are, to some extent. They seemingly cannot crack down on thin, repetitive or scaled content, and the search experience is overrun by it. Well, that and intrusive ads, AIOs and shopping features.

Not exactly a one-way problem, is it?

The over-optimisation of poor quality content has been an issue for a long time. The Helpful Content Update was designed to deal with this but failed.

In my opinion, it stripped away a huge amount of creativity and entrepreneurship that Google had once afforded. Their handling of it was so poor they couldn’t risk taking the same algorithmic approach to Parasite SEO. Which was, IMO, a much more serious issue.

So, the better you understand how Google really audits pages and how third parties are trained to spot quality, the more value you can add.

What’s changed?

Google updated the Needs Met ratings to better distinguish between truly helpful content and SEO-driven affiliate spam.

E-E-A-T

The 2025 guidelines better reflect the importance of first hand experience and how different types of expertise dictate a page’s quality score.

Does the author show they have used the product(s) in question?

Have they contributed to multiple different articles?

Have they featured on podcasts? Are they a prominent figure in the industry?

Do they need to be?

There are lots of content types where personal experience is immensely valuable. E-E-A-T doesn't exist so you can plaster an author bio over every page and move on. It’s about developing an appropriate level of quality for different types of content that best serves the user and their needs.

Travel blogs are a great example. I don’t need to know that someone who’s been to a village in Thailand has a fucking degree in chemical engineering. Or has written for the NYT for years.

I just want to see that they have drunk a bucket of ambiguous alcohol and over-caffeinated red bull on a now grim looking peninsula.

It’s important to remember that Google’s algorithm isn’t static. Not all things are created equal. In areas where real expertise and first hand experience are crucial to serving the user with the best result (think YMYL style content), E-E-A-T attributes are dialled up.

Reddit has had an extraordinary rise in recent times. But I’d wager it still doesn’t rank well for financial advice or health related issues. It’s not that Reddit doesn’t have a ton of potentially useful content about pensions or disease. It’s that Google’s algorithm(s) are weighted differently for different query types.

Authorship

The below section doesn’t exist in the previous version of the guidelines.

You’re telling me I can’t just fabricate an author and their experience in a field and expect to rank well anymore?

It’s political correctness gone mad.

Call me a fantasist, but I suspect this had something to do with all the third party publishers post the SRA. Changing the author’s job titles and pretending they worked for the ‘parasite’ host. Just a bit of harmless black hat SEO-ing. You can read about that here:

There’s also more of a focus on transparency and website accountability. In the new guidelines, section 4.5.3 is titled Deceptive Page Purpose, Deceptive Information about the Website, Deceptive Design. There was no mention of ‘deceptive information about the website’ in its prior incarnation.

Google has specified these definitions below, going so far as to say;

All pages or websites using deception of any kind should be marked lowest.

Minor tweaks to copy and definitions but poignant ish I think. At one of the publisher roundtables Danny Sullivan hosts, he was very explicit about how Google deciphers quality. Post the rollout of the SRA he said something like;

We take what you say on your website at face value. We read everything.

Not verbatim, but you get the idea. Anything outward facing that is in any way contradictory or, perish the thought, a bare faced lie will have consequences.

Now, say what you like about Danny Sullivan… That’s it. Say what you like about him.

After Mark Williams-Cook’s work on how Google categorises keywords and consensus scoring, we know that anything you publish that doesn’t agree with the general/scientific consensus will struggle to rank. Anything deceptive or misleading will have consequences.

Needs Met scale adjustments

Google’s Needs Met scale is there to determine how effectively a page satisfies a user’s query. It’s a great way of analysing content and establishing whether it hits the mark for your users.

It reads like so:

The most recent updates have seen Google simplify definitions and clarify that Fully Meets can only apply when a single, clear result can satisfy all users. I suppose to account for differences in opinion and the complexities associated with most queries.

All other categories have been given clearer interpretations. They’ve clearly made an effort to be less ambiguous post Site Reputation Abuse update.

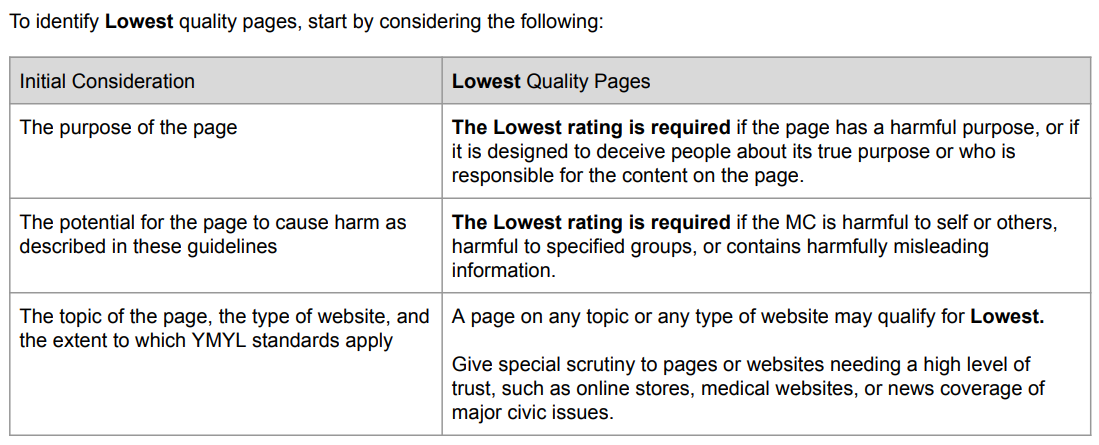

Changes in page quality ratings

Page quality is scored on a scale from lowest (1) to highest (5). This hasn’t changed. Why would it? But to borrow an expression from my late and let’s say agricultural father, they have ‘tinkered’ with a couple of things.

The Lowest and Low-Quality Page sections have been updated to better align with Google’s Search Web Spam Policies, making it clearer when a page should receive a low rating.

Now, comparing that to the 2025 version.

This is a clearly pointed reference to third parties and authoritative domains benefiting from Parasite SEO. Of which I admit we were one. Along with every publisher.

And Google haven’t stopped there. The updated guidelines around low quality content have two new distinct subheadings:

The main content is created with low effort and has low added value

Filler as a poor user experience

This goes beyond just websites that scrape content. It’s about originality and uniqueness. Expertise. It’s easy to create a page with the same structure as those in positions one or two. It’s not easy to be a true expert on the topic at hand.

And do you know what experts have? Unique knowledge. Opinions built on years of expertise. They can bring you something no one else can. That’s the foundation of creating content worth indexing.

*Background noise* Recipe pages *more background noise*.

Recipe bloggers have got so used to providing us with their life story that you have to scroll 19 times to get a recipe for sausage pasta. Like with Forbes et al, you can’t blame people for doing it if it works.

So, the structure of your page really matters. Give people what they want quickly and efficiently. Don’t make people work for it.

The Highest and High-Quality sections have barely changed. You could argue there is more focus on E-E-A-T and reputation as hallmarks of quality. Elements that are harder to fake. I imagine to combat the overwhelming amount of scaled content abuse and low value / AI-generated content.

Spam updates and AI-generated content

The below is a completely new section in the 2025 guidelines. For the life of me I can’t think why they would need to add this in…

I think AI can supercharge your content efforts. But Google’s algorithm continues to reward content volume and signals en masse. Therein lies the problem.

There have been many sites who fell foul of the SRA who have simply scraped that content, launched it on another domain and will repeat the process until hell has frozen over or Google actually sort the sodding issue.

Don’t hate the player, hate the game. Or the greedy algorithm.

Rinse and repeat for this section on expired domain abuse. Also not something that was mentioned in the last version. It works. That’s the problem. I suspect that Google engineers are trying to point the algorithm in the right direction. But there’s constant over and under steer. Too far one way and a correction that means we never actually point at the target.

There’s probably a good war analogy someone with historical knowledge could find here. But you’re stuck with me. More of a fart joke kind of guy.

Still funny.

If you continue to partake in expired domain abuse you’re being actively discouraged. You’ve been warned…

Ad guidelines and UX considerations

The QRG has a heading titled Ad blocking extensions. The second paragraph, beginning with ‘Important’, has been added in the updated version. Google wants its raters to see the full ad experience.

This is important for two reasons. Well, two I can think of:

As more and more websites start using paywalls, it’s easy for us as SEOs to forget about the unpaywalled experience.

As yields drop and search traffic dwindles, commercial teams will try to hit targets by becoming more aggressive with ad units

As someone who works on a paywalled site, I’ve been part of both. It’s crucial to view the ad experience for non-subscribers as your website will have different rules for different types of content. So speak to your commercial teams and try to abide by some general advertising guidelines.

Don’t compromise the quality of the page. The short-term gain will never be worth it.

You can read more about ad best practices from the Coalition for Better Ads and Google AdSense Guidelines. Usually, something like 3 to 5 ads per 1,000 words is industry best practice.
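If you want a quick way to sanity-check a page against that rule of thumb before the conversation with your commercial team, here's a minimal sketch. The function names and the default upper bound of 5 are my own illustrative assumptions based on the figure above. They are not an official threshold from Google or the Coalition for Better Ads.

```python
def ads_per_thousand_words(ad_count: int, word_count: int) -> float:
    """Return the number of ad units per 1,000 words of main content."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return ad_count * 1000 / word_count


def within_rule_of_thumb(ad_count: int, word_count: int,
                         upper: float = 5.0) -> bool:
    """True if ad density is at or below the assumed upper bound.

    The default of 5 ads per 1,000 words is an assumption taken from the
    rule of thumb in the text, not a published limit.
    """
    return ads_per_thousand_words(ad_count, word_count) <= upper


# Hypothetical page: 6 ad units on a 1,500-word article.
density = ads_per_thousand_words(ad_count=6, word_count=1500)
acceptable = within_rule_of_thumb(ad_count=6, word_count=1500)
```

It won't tell you whether the ads are intrusive, which is the bit that really matters, but it gives you a number to put in front of people.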

What does it mean for news and publisher SEOs?

Elements like trust and reputation signals, and fresh, accurate content are non-negotiables. They have been for some time. Dialling these signals up or down, depending on the type of content, can make all the difference.

Now, there’s no excuse not to really consider what your users (and Google) expect from each type of content. News is driven by content volumes and source reputation, whilst evergreen is driven by engagement signals. They are different worlds.

You have to understand both.

Understand not all content is equal

Big publishers still have a huge advantage. The content volume and perceived topical authority of certain sites mean we can rank for a wide variety of complex topics. You need to understand the nuance between news, sport, financial topics, and affiliate content et al.

It’s not easy.

You aren’t working on a single category niche site. You need to understand what qualifies as quality content across different categories. Maybe more importantly, you need to help your product and editorial teams understand why this is true, too.

News

The last five years have seen huge changes for the majority of publishers. International sites have risen up the ranks in Top Stories and Google News, political topics have had much more stringent ranking criteria applied and Google Discover has become even more volatile.

It’s not easy. But the QRG does give some indication as to why.

News publishers must double down on trust and originality. Investigative journalism, fact-checking, and expert-authored content will be prioritised over low-quality aggregators. You must maximise your trust and expertise at the source and page level. Editorial policies, awards, bylines, and author structured data are no longer nice-to-haves.
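For anyone wondering what author structured data might look like in practice, here's a rough sketch that builds schema.org `NewsArticle` markup in Python. The helper name, the example names and the example.com URLs are all hypothetical. The properties used (`author`, `jobTitle`, `publisher`, `publishingPrinciples`) are real schema.org properties, but which ones Google actually uses is not something the QRG spells out, so treat this as a starting point rather than a recipe.

```python
import json


def build_article_jsonld(headline, author_name, author_url, job_title,
                         publisher_name, principles_url):
    """Build a schema.org NewsArticle dict with author and publisher details."""
    return {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,        # author profile page on your own site
            "jobTitle": job_title,    # should match what the site says elsewhere
        },
        "publisher": {
            "@type": "Organization",
            "name": publisher_name,
            "publishingPrinciples": principles_url,  # editorial policy page
        },
    }


# All values below are placeholders for illustration only.
markup = build_article_jsonld(
    headline="Example headline",
    author_name="Jane Example",
    author_url="https://example.com/authors/jane-example",
    job_title="Senior Reporter",
    publisher_name="Example News",
    principles_url="https://example.com/editorial-policy",
)

# Serialise for embedding in the page <head>.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(markup, indent=2)
    + "</script>"
)
```

The markup only helps if it matches what is visibly on the page. A job title in the structured data that contradicts the byline is exactly the kind of thing the deception section is aimed at.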

I’ve written at length about how Google really ranks news sites. The algorithm assigns a source rank to each article based on prior performance and source reputation.

YMYL and Affiliate content

Affiliate and YMYL will need higher content quality. After the last few months, you shouldn’t need me to tell you that.

Google is actively demoting thin, low-value-add, and sales-driven content. Your content has to add value. It must have unique elements. Elements that only someone with genuine expertise on a topic could know.

The argument for using experts and author bylines is not to placate Google. It’s about creating the best quality content for your users. If we’re creating a page reviewing the best coffee machines, is our author’s first hand experience important?

Of course it is. It’s the foundation of the page.

But is it more important than the experience someone with years of expertise has? That’s a question for an individual user. It’s different, but it adds value. It improves the page.

Maybe I’ve got my tin foil hat on here, but I don’t think it’s unreasonable to suggest that Google and its Quality Raters aren’t huge affiliate fans. It’s not that hard to see why.

But in a 185 page, 60,000 word plus document ‘affiliate’ is mentioned five times. Five. Given there are entire sections on site reputation abuse, this feels a little underwhelming to me.

For context ‘news’ is mentioned 124 times. ‘YMYL’ 123.
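If you fancy running this kind of count yourself on a text export of the guidelines, a minimal sketch could look like the below. The function name and sample text are made up for illustration, and it matches whole words only, ignoring case, so 'affiliates' would not count towards 'affiliate'. A different counting method will give different numbers.

```python
import re


def count_mentions(text: str, terms: list[str]) -> dict[str, int]:
    """Count case-insensitive, whole-word occurrences of each term in text."""
    counts = {}
    for term in terms:
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        counts[term] = len(pattern.findall(text))
    return counts


# Hypothetical sample text, standing in for the full document.
sample = "News sites and YMYL pages differ. Affiliate content is not news."
counts = count_mentions(sample, ["affiliate", "news", "YMYL"])
```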

Reputation. Reputation. Reputation.

For all Google’s faults in recent times, they are trying to combat misinformation. Not very well, but they’re trying.

AI spam

Scaled content abuse

Site reputation abuse

Fake authorship

Generally, sites that engage in this kind of abuse can be incredibly successful for six months. Maybe 12. Maybe even longer. Eventually, it catches up to them. So, playing the long game and not cutting corners is the route you have to take. Particularly if you work for a bigger brand.

So make sure the efforts the content creators go to are replicated on the page and at a brand level. Shout about the work you do, because no one will do it for you.

Accuracy matters. You have to be a trusted source to rank for rich features. So if you employ some kind of fact checking ability, then make sure you shout about it on your site. It will have a positive impact.

Main content vs supplementary content

I’ve always felt this is a really good way of breaking down a page and the purpose (and value) of each section. Think of the main content of each page as its primary purpose. If you’re feeling nerdy, I’d liken it to the DOM or semantic HTML. It’s primarily text, tools and multimedia, but it could also include reviews and third-party content if that was essential to the page itself.

Considering the role supplementary content and advertising play on the UX, this matters. The below from our site is a nice example of why supplementary content matters. Or rather, how it can detract value from a page.

You’ve always been told that links matter. And they still do. But rarely does doing something at scale have the impact you think it will. And these links are never clicked. Being clicked, to me, is the sign of a good link, internal or external.

But commercial links placed at the bottom of each article to try and bolster your commercial content aren’t appropriate. These links have no positive context, and this form of supplementary content detracts from the main content. In my opinion, at least.

As Google continues to erode a publisher’s ability to generate clicks, commercial teams will increase ad units to redress the revenue balance. This is almost always a mistake. The short-term revenue benefits will likely be outweighed by the long-term cost to UX and brand affinity.

So, be careful.

Hey Harry, I've a couple of questions I'd like to hear a little more from you.

1) "So speak to your commercial teams and try to abide by some general advertising guidelines. Don’t compromise the quality of the page. The short-term gain will never be worth it."

How would you set the conversation between you, the commercial team, and the HiPPOs (referring to hippos as described here: https://www.kaushik.net/avinash/seven-steps-to-creating-a-data-driven-decision-making-culture/) to persuade them to stop looking at the next mile only? Maybe it's just me but I've often seen the "Google may scold us" argument perceived as weak and somehow lazy over time in discussions.

2) "Investigative journalism, fact-checking, and expert-authored content will be prioritised over low-quality aggregators". Close to the first question, how much time do you think is needed to see the ROI of these activities? Not saying a whole new approach to content publishing, even an MVP of investigative journalism =D.

TY!!!!