How conceptual models can help you (easily) create better content

Mark Williams-Cook's Google data exploit can help you create content that satisfies user intent in a way you've probably never imagined

Towards the back end of last year, Mark Williams-Cook and the team at Candour uncovered one of the more thought-provoking tidbits in recent times. Hidden within 2TB of data, 90 million search queries, and 2,000 properties lies a glimpse into Google’s thought process.

If you have 30 minutes and prefer the visual medium over the written word (CHARLATAN), this video from Search Norwich is absolutely worth a watch.

It will either confirm or refute several things you thought you knew about SEO.

TL;DR

Google assigns site quality scores (from 0 to 1) to every domain and subdomain

Search queries are categorised into eight query types based on intent

Google determines reliability through a consensus score, ranking content that aligns with widely accepted truths higher (the opposite is also true)

Titles affect rankings via predicted click probability, even if CTR isn’t a direct ranking factor

Google uses a newsiness score to determine how newsy each query is

What are these eight types of queries?

It turns out that Google doesn’t treat all queries the same. Instead, it categorises them into eight Refined Query Semantic Classes (RQ).

As a human with access to an LLM that consumes up to a litre of liquid for every five queries, I thought I’d test the waters. So these definitions are provided by my ludicrously thirsty robot friend.

Short Facts

Quick information retrieval, often factual.

You would hope, wouldn’t you, that a short fact was often factual. I love ChatGPT like the son I never want, but its condescending tone and inability to have a bit of fun really grate on me.

Sorry, where were we? Ah yes. These are queries where the user seeks a concise, direct answer to a specific fact-based question. These often align with "featured snippets" or knowledge panels in search results.

If you need an example of a short fact:

Q: Who is the Prime Minister of the UK?

A: Margaret Thatcher

Often factual indeed.

Comparison queries

Queries where users want to compare entities, products or concepts, either to help with a decision or to evaluate differences and similarities.

‘Versus’ queries have always played a key role in the customer journey. Particularly when comparing high-value products.

E.g. iPhone whatever number we’re on vs the Samsung Galaxy Who Cares.

Consequence

Queries where users seek to understand the effects or outcomes of a specific action, decision, or event. People trying to predict how stupid their decision is or was and how disastrous the outcome will be.

E.g. What happens if I drink too much coffee

It’s like the seven stages of grief in that it starts with the jitters and you end up shitting yourself.

Reason

These queries ask for explanations about why something happens or the underlying causes of an event or phenomenon. Knowledge-hungry humans trying to figure out the meaning of life. Or how to stop doomscrolling. Truly an impressive race.

E.g. Why is the sky blue?

Definition

Queries focused on understanding the meaning of a term, concept, or phrase. These can range from fairly simple concepts to really quite nuanced phrasing, which makes ‘definition’ an interesting topic.

E.g. What is a blockchain?

A blockchain is where stupid people go to tell their peers they are also stupid.

Instruction

Queries where users are looking for step-by-step guidance or how-to information to accomplish a task. Learning how to perform a task.

E.g. how do I get rich?

Now that is a nuanced instruction. But having a willingness to lie, cheat, steal and target both the physically and mentally infirm will stand you in good stead.

Boolean

These are direct yes-or-no questions or queries with a binary outcome. Straightforward decision-making and validation.

E.g. is it raining today?

In the UK in January? Yes. Or it’s freezing. Or better still, both.

Short-fact queries make up a significant chunk of Google’s traffic. These are unquestionably the terms that are at huge risk from AIOs. For two main reasons - they’re easy to answer in the SERPs and, crucially, keeping users on Google properties increases Google’s chances to make more money.

And if we’ve learnt anything from the last 2-3 years, it’s that Google is really, really, really interested in money.

Other

Queries that don't fit neatly into the above categories - often vague, conversational, or exploratory. These may include navigational or ambiguous queries.

I believe some local queries, reviews and other less clearly defined queries are bucketed into this group. I did see Mark describe where local queries fit in, but I can’t find the LinkedIn message, so you’ll have to take my ill-educated word on it.

Why is all of this important?

It’s important because now you know how you should answer queries. If you know a query is a ‘definition,’ then you know the type of content Google and (shock horror) the user is expecting.

It doesn’t work too differently from a consensus score. Google has enough user data to know exactly what the answer should look like. A preferred format if you will. If the query should return an instructional response, then a comparison or definition isn’t as appropriate and will serve the user less effectively.
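To make that concrete, here is a minimal sketch of how a query class could translate into a line in a content brief. The class names follow the eight listed above, but the `EXPECTED_FORMAT` notes and the `brief_line` helper are my own hypothetical paraphrase, not anything Google or Mark has published.

```python
# Hypothetical mapping from query class to a briefing note.
# The notes paraphrase the class descriptions above; they are assumptions,
# not a documented Google preference.
EXPECTED_FORMAT = {
    "short_fact": "Give the direct answer in the first sentence.",
    "comparison": "Set the options side by side and spell out the differences.",
    "consequence": "State the outcome of the action, then explain it.",
    "reason": "Explain the cause and answer the 'why' up front.",
    "definition": "Open with a one or two sentence definition of the term.",
    "instruction": "Use numbered, step-by-step guidance.",
    "boolean": "Lead with yes or no, then justify it.",
    "other": "No fixed format; check what currently ranks.",
}


def brief_line(query: str, query_class: str) -> str:
    """Return one line of a content brief for a query.

    Unknown classes fall back to the guidance for 'other'.
    """
    guidance = EXPECTED_FORMAT.get(query_class, EXPECTED_FORMAT["other"])
    return f"{query} [{query_class}]: {guidance}"


if __name__ == "__main__":
    print(brief_line("what is a blockchain", "definition"))
```

The point is only that the class, once known, can drive the instruction you give the writer. The wording of each note is yours to change.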

Since writing this, Mark has released his own Refined Query Semantic Class Classifier using nearly 5 million queries to test and refine it.

And the always brilliant Dan Petrovic has made a query intent classifier based on the above. It’s not trained on anywhere near as many keywords, but still very useful.

Now you can brief new articles and updates with impeccably clear instructions based on Google’s sandbox environment.

SEO can have a huge impact on your ability to understand and build a brand.

Site quality score

Google also assigns a site quality score to every domain (and interestingly subdomain), ranging from 0 to 1. This score determines a website’s eligibility for enhanced search features such as featured snippets, People Also Ask (PAA), and other rich results.

Sites scoring below 0.4 are excluded from these features, no matter how well they optimise their content. Google’s evaluation of websites relies on:

Brand mentions in anchor text

Branded search volume

User search behaviour

Click-through patterns when the website appears in search results

If your brand or page outperforms its position and expected CTR, that’s a great sign. It’s a show of trust from users that will be reciprocated by everyone’s favourite search despot.
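As a rough sketch of the two ideas above, the snippet below gates rich-result eligibility on the 0.4 threshold and measures how far a page's CTR sits above or below what you expected. The function names are hypothetical, and `expected_ctr` is an assumption: it is a number you would have to supply from your own Search Console data, since the expected CTR for a given position is not something the article provides.

```python
RICH_RESULT_THRESHOLD = 0.4  # the cut-off quoted above


def eligible_for_rich_results(site_quality: float) -> bool:
    """True if a 0-1 site quality score clears the quoted 0.4 threshold."""
    if not 0.0 <= site_quality <= 1.0:
        raise ValueError("site quality score must be between 0 and 1")
    # "Below 0.4" is excluded, so exactly 0.4 counts as eligible here.
    return site_quality >= RICH_RESULT_THRESHOLD


def ctr_uplift(clicks: int, impressions: int, expected_ctr: float) -> float:
    """Actual CTR minus expected CTR. Positive means the page outperforms."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions - expected_ctr


if __name__ == "__main__":
    print(eligible_for_rich_results(0.35))
    # expected_ctr=0.10 is an illustrative placeholder, not a benchmark.
    print(ctr_uplift(clicks=120, impressions=1000, expected_ctr=0.10) > 0)
```

You can't see your own site quality score, so the first function is illustrative only. The second is something you can actually track.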

This is almost certainly how eligibility for Top Stories works. Until you meet certain criteria, you are not going to be present. And I am sure this consensus score changed pre-COVID, when a number of big publishers could no longer rank for certain topics.

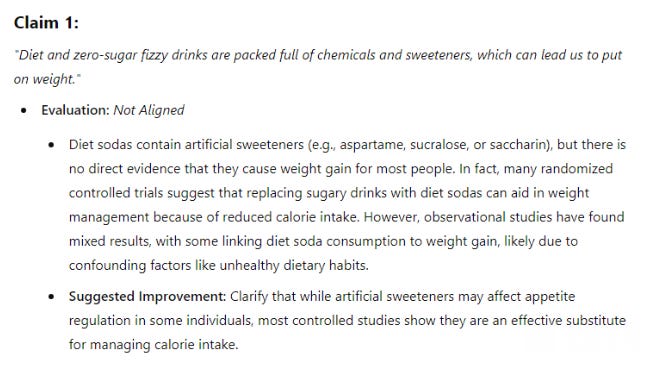

Consensus score

Google’s algorithm assesses how well your content agrees with widely accepted views. This consensus score influences rankings, particularly for certain types of queries in YMYL (Your Money or Your Life) topics. Areas that have real-life consequences for people.

Debunking Queries: If someone searches for something obviously disprovable like ‘aliens built the pyramids,’ Google prioritises results supporting the consensus opinion. Contradictory or neutral content is unlikely to rank.

Subjective Queries: For politically charged or opinionated topics, Google intentionally mixes consensus, neutral, and non-consensus content to offer diverse perspectives.

Or just ranks four versions of the same BBC article…

I’m joking. Sort of.

If your content aligns with the majority consensus on certain topics, it’s more likely to rank. However, Google balances the playing field for subjective topics, which might explain why excellent content sometimes struggles to rank.

Steve Toth of SEO Notebook created a truly brilliant fact-checking Custom GPT designed to do just that. Fact check each claim in your article and return how aligned you are with the evidence.

This is one of the most impressive GPTs I’ve seen. For any page update, you can check the article's factuality. Imagine rolling this out at scale.

For every page update or assessment, you can help the author review the validity of their arguments at scale. You can even check for E-E-A-T at an accuracy level. Particularly useful for articles that cite multiple and diverse sources.
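If you want to track this across a batch of pages, a small tally is enough. The sketch below assumes you have copied each claim and its verdict into a list by hand. The three verdict labels and the `summarise` function are hypothetical, and this is not how Steve's GPT reports its results.

```python
from collections import Counter

# Hypothetical verdict labels, assumed for this sketch.
VERDICTS = {"supported", "contradicted", "unverified"}


def summarise(checks):
    """Summarise a list of (claim, verdict) pairs.

    Returns the count per verdict, the share of supported claims,
    and the contradicted claims to raise with the author.
    """
    counts = Counter()
    to_raise = []
    for claim, verdict in checks:
        if verdict not in VERDICTS:
            raise ValueError(f"unknown verdict: {verdict!r}")
        counts[verdict] += 1
        if verdict == "contradicted":
            to_raise.append(claim)
    total = sum(counts.values())
    share = counts["supported"] / total if total else 0.0
    return {
        "counts": dict(counts),
        "supported_share": share,
        "raise_with_author": to_raise,
    }


if __name__ == "__main__":
    page_checks = [
        ("Claim 1", "supported"),
        ("Claim 2", "contradicted"),
        ("Claim 3", "supported"),
        ("Claim 4", "unverified"),
    ]
    print(summarise(page_checks))
```

The share of supported claims is a crude number, but it gives you something to sort pages by before deciding which ones to take back to the author.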

So how can I use this?

At three levels: page briefing, page update and E-E-A-T.

Briefing better articles

Briefing an article can require treading quite a fine line, so this may not be for everyone. You may need to manage egos, ensuring that questions people are interested in are answered clearly and succinctly.

We’ve spent years trying to reduce the length of introductory guff and get writers to cite their sources. It’s a tough gig.

Once you’ve finished the keyword research, you can run the queries through Mark’s aforementioned keyword classifier. Then bucket each term into one of the eight categories - short fact, boolean, instruction, definition etc.

Now you’ll have pages that answer questions in a surprisingly coherent manner.
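The bucketing step can be sketched in a few lines. To be clear, the `classify` function below is a toy stand-in built from crude keyword rules I made up for illustration. It is not Mark's or Dan's classifier, and it will get plenty wrong (it would call "what is the capital of France" a definition). In practice you would pass your real classifier in as the `classifier` argument.

```python
import re
from collections import defaultdict

# Toy keyword rules, checked in order. An assumption for illustration only.
RULES = [
    ("comparison", re.compile(r"\b(vs\.?|versus)\b|\bcompared (to|with)\b")),
    ("consequence", re.compile(r"^what happens (if|when)\b")),
    ("definition", re.compile(r"^what (is|are)\b|\bmeaning\b|\bdefinition\b")),
    ("instruction", re.compile(r"^how (to|do|can|should)\b")),
    ("reason", re.compile(r"^why\b")),
    ("short_fact", re.compile(r"^(who|when|where|which|how (many|much|old|long|tall))\b")),
    ("boolean", re.compile(r"^(is|are|was|were|can|could|do|does|did|should|will|has|have)\b")),
]


def classify(query: str) -> str:
    """Return the first matching class, or 'other' if nothing matches."""
    q = query.strip().lower()
    for label, pattern in RULES:
        if pattern.search(q):
            return label
    return "other"


def bucket(queries, classifier=classify):
    """Group queries by the class the classifier assigns to each."""
    buckets = defaultdict(list)
    for query in queries:
        buckets[classifier(query)].append(query)
    return dict(buckets)


if __name__ == "__main__":
    keywords = [
        "Who is the Prime Minister of the UK?",
        "What happens if I drink too much coffee",
        "Why is the sky blue?",
        "What is a blockchain?",
        "how do I get rich?",
        "is it raining today?",
    ]
    for label, terms in bucket(keywords).items():
        print(label, terms)
```

Each bucket then becomes a section of the brief, with the expected format spelled out for the writer.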

Improving page updates

Let’s say you missed the chance to brief the article at inception and a writer went off on a tangent. Never, I hear you cry. Not in the newspaper world.

I know, I know. But trust me, it happens.

You can now review the article and reflect on how they answered each query.

Where does the article rank for its core term?

What about for the secondary and tertiary terms?

Does the page target the right keywords?

Have they clearly provided a definition for definition terms, or step-by-step guidance for instructional terms?

If you can see an issue, rebrief the article with an updated list of queries and guidance on the expected format for each.

Ensuring factual accuracy

And if you’ve got the chops, you can check the validity of the writer’s claims. If you have a lot of more functional evergreen content that’s underperforming, particularly in health or financially related topics, it is worth pursuing.

Identify quality pages you think are underperforming and use Steve’s Custom GPT to check the factual accuracy of the claims made. If there are inaccuracies, I would raise them as an SEO issue with the author.

If you’re aware that the claim is either slightly off or a bare-faced lie, raise it. If the author’s aware and wants to plough on with their flat earth or jet fuel/steel beam-related nonsense, you have, IMO, done your job.

And that’s all we can do.

That’s it!

It’s all on the writers now. We can brief content with a level of factual accuracy and clarity like never before.

If you use this, let me know how it went in the comments.