Understanding AI Overviews: A patent-by-patent breakdown

Analysing three patents to see how AIOs work, and helping you figure out what to do about them (and AI Mode)

Deep search.

It’s bloody here, ladies and g’s. The rushed, trillion-dollar development no one wanted.

Predictive. Personalised. Conversational. They must’ve seen the success of voice search and thought, ‘we want some of that.’

User: ‘Hey Siri, what’s the weather like today?’

Siri: ‘Heather is an abundant plant in heathland, moors and bogs in the UK.’

Angry, bitter user: ‘No, weather. What’s the weather like today?’

Siri: ‘Elvis was an American singer and actor born in Tupelo, Mississippi.’

Fucking robots. I’d have better luck hanging some seaweed in my house or touching the back of a pig or whatever the hell else people did to check the weather before phones.

TL;DR

When a user searches, a query fan-out approach is used, the results are grounded using RAG, and finally the answer is personalised

The query fan-out model is crucial. We need to create content that effectively answers the next question(s) in the sequence

In an approach akin to the British Empire, AIOs have spread to over 200 countries and 40 languages worldwide

In the US, Google is the market leader in generative AI patent filings, and those filings grew 56% between 2024 and 2025

How do AI Overviews work?

Google’s AIOs are powered by its Gemini large language model (LLM). They provide AI-generated summaries directly within Google Search results, often appearing at the top of the page.

LLMs are word predictors. They’re trained on vast datasets and aim to predict the most probable and coherent text based on the input query.
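To make ‘word predictor’ a bit more concrete, here’s a toy sketch of a single prediction step, not how Gemini is actually implemented: the model scores every candidate next token, softmax turns those scores into probabilities, and the output is either the most likely token (greedy) or a weighted random draw. The three-word vocabulary and its scores are made up; a real model scores tens of thousands of tokens at every step.

```python
import math
import random

def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1.
    Lower temperature sharpens the distribution; higher temperature flattens it."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max to avoid overflow in exp()
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def next_token(logits: dict[str, float], temperature: float = 1.0,
               greedy: bool = False, rng=None) -> str:
    """Pick the next token: the single most probable one, or a weighted random draw."""
    probs = softmax(logits, temperature)
    if greedy:
        return max(probs, key=probs.get)
    rng = rng or random.Random()
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens])[0]

# Made-up scores for the word that follows "What's the weather like"
logits = {"today": 3.0, "tomorrow": 1.5, "heather": 0.2}
print(softmax(logits))
print(next_token(logits, greedy=True))          # always "today"
print(next_token(logits, rng=random.Random()))  # usually "today", occasionally not
```

Sampling is why the same prompt can produce different answers, and why an unlikely continuation (‘heather’) occasionally wins.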

Now, the more recent models have an element of reasoning. Like a spotty child, they have been taught to “think before you speak.” They use a combination of the following in an attempt to reason:

Consistency sampling

Reinforcement learning (when you hit a robot on the head with a stick)

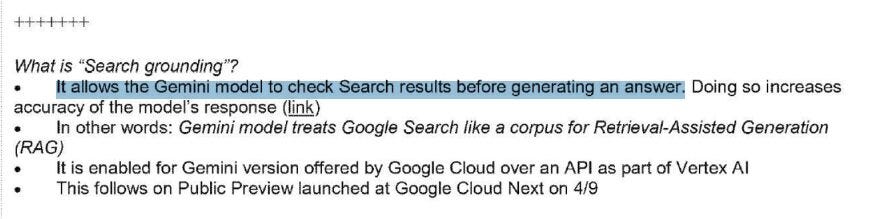
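Consistency sampling is usually called self-consistency in the research: sample several independent answers to the same question and keep the one most of them agree on. Nothing in the patents below confirms that AIOs do exactly this, so treat this as a sketch of the general idea. `sample_answer` is a hypothetical stand-in for the model; here it just draws from a made-up distribution.

```python
import random
from collections import Counter

def majority_vote(answers: list[str]) -> tuple[str, float]:
    """Return the most common final answer and the share of samples agreeing with it."""
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)

def sample_answer(question: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one sampled reasoning path from an LLM.
    Here each path simply lands on an answer from a made-up distribution."""
    return rng.choices(["sunny", "rain", "heather"], weights=[0.6, 0.3, 0.1])[0]

def self_consistency(question: str, n_samples: int = 9, seed: int = 0) -> tuple[str, float]:
    """Sample several independent answers, then keep the one most of them agree on."""
    rng = random.Random(seed)
    answers = [sample_answer(question, rng) for _ in range(n_samples)]
    return majority_vote(answers)

print(majority_vote(["sunny", "rain", "sunny"]))  # 'sunny', agreed by 2 of 3
print(self_consistency("What's the weather like today?"))
```

The agreement share doubles as a crude confidence signal: 9 out of 9 is a lot more reassuring than 4 out of 9.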

As a somewhat ‘sophisticated’ model, AIOs integrate with Google’s search index and use RAG (Retrieval-Augmented Generation) to retrieve and reference web results surfaced by Google’s more traditional ranking systems.

Instead of just extracting a snippet (like a Featured Snippet, obviously), AI Overviews aim to provide a more comprehensive summary by synthesising information from multiple sources.

This is called grounding: a process designed to reduce hallucinations (hah!) by tying the model’s output to real-world, (almost) real-time data. Not just pre-training data.
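Google hasn’t published how AIO grounding works under the hood, so this is a generic RAG sketch under obvious assumptions: a toy word-overlap retriever standing in for the search index, three made-up documents, and a prompt that tells the model to answer only from the retrieved sources and cite them.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase word set, used for crude matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, index: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by how many query words they share (a toy stand-in for
    a real search index) and return up to k matching (doc_id, text) pairs."""
    q = tokens(query)
    ranked = sorted(index.items(), key=lambda item: len(q & tokens(item[1])), reverse=True)
    return [(doc_id, text) for doc_id, text in ranked[:k] if q & tokens(text)]

def grounded_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Put retrieved passages in front of the question so the model answers
    from them (and can cite them) rather than from pre-training alone."""
    sources = "\n".join(f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(passages, 1))
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )

index = {
    "forecast-page": "Today's weather in London: light rain, 14C.",
    "heather-guide": "Heather grows on heathland, moors and bogs.",
    "pizza-forum": "Add glue to pizza sauce so the cheese sticks.",
}
passages = retrieve("weather in London today", index)
print(grounded_prompt("What's the weather like in London today?", passages))
```

Ask it about glue and pizza and the satirical forum post is exactly what gets retrieved. Grounding ties the answer to a source; it doesn’t make the source true.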

Navigating AI Mode for Publishers

This may well be one of the defining moments in your career. All of ours. Navigating it calmly and thoroughly will be a real feather in our cap(s).

Why do they hallucinate?

AI Overviews are powered by an LLM. They’re fallible. Based on Lily Ray’s research, probably a little too fallible.

Like any LLM, it is a probabilistic, token-based model (text is broken down into smaller units called tokens to help the model ‘understand’ it), and it makes mistakes. Particularly as there may be some kind of speed vs accuracy trade-off.

Convenience over all.
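For anyone who hasn’t seen tokenisation up close, here’s a toy version: greedy longest-match splitting against a tiny, hand-picked vocabulary (`vocab` below is invented). Production tokenisers such as byte-pair encoding learn their vocabularies from data, but the principle is the same: the model never sees words, only pieces of them.

```python
def tokenise(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match subword tokenisation.
    Anything not in the vocabulary falls back to single characters."""
    pieces: list[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the window from the right until the piece is a known token
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces

vocab = {"weather", "heat", "her", "rock", "s"}
print(tokenise("weather", vocab))  # ['weather']
print(tokenise("heather", vocab))  # ['heat', 'her']
print(tokenise("rocks", vocab))    # ['rock', 's']
```

‘weather’ and ‘heather’ differ by one letter, but here the model sees one token in the first case and two unrelated ones in the second.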

It tries to ground itself in sources and create plausible-sounding text, because there’s limited true reasoning involved. It recognises and predicts.

Which, in fairness, is not dissimilar to at least 75% of the population. Millennia of evolution. Opposable thumbs. All just to pick your nose.

Pointless.

To give some credence to Google’s little stopgap, AIOs are pulling data from the live web. And whilst they were trained on a dataset of unparalleled size, biases, inaccuracies and misnomers can and do influence the output.

(I do actually quite like them in lots of ways. But don’t tell anyone I said that).

Some searches are complicated. And as SEOs, we can all appreciate how much drivel is out there. I’ve spent enough time looking at beige waffle online to feel a certain level of sympathy.

Remember the ‘put glue on pizza’ and ‘eat one rock a day’ examples, where the stupid robot misinterpreted satirical content or a niche forum discussion as general advice.

Not just humans that misinterpret a bit of craic.

I should point out that AI models are beginning to hallucinate more frequently. Ironically, their reasoning capabilities seem to be making them more fallible.

Thinking can lead to mistakes. Who knew?

How does the query fan out model help?

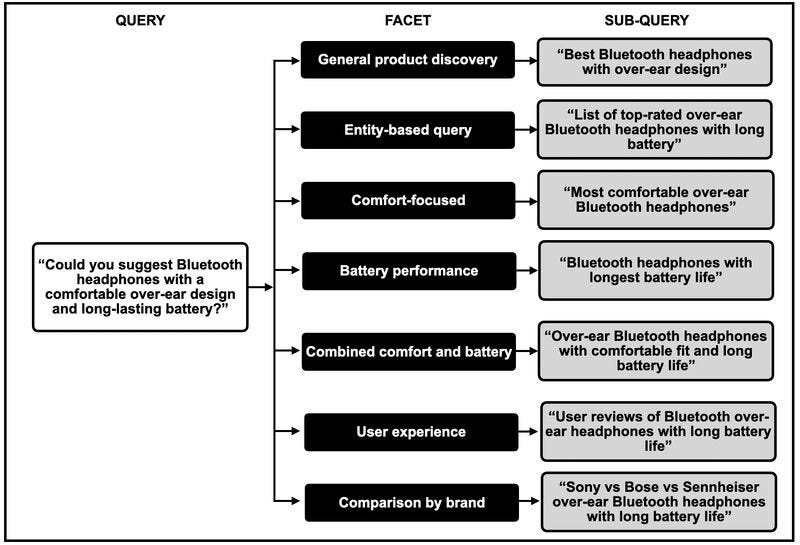

Query fanning fakes thinking. It is supposed to address more nuanced queries by breaking the main query down into sub-queries or concepts.

The LLM can now run simultaneous searches across Google’s bloated index for these sub-queries. Not just the primary topic.

“We do the work, so you don’t have to…”

We have built AI in our image. Inaccurate and lazy.
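A minimal sketch of the fan-out step, with loud assumptions: `fan_out` uses hypothetical string templates where the real system uses a trained generative model, and `search` is a stub that sleeps instead of querying an index. What it does show is the shape of it: one query in, several sub-queries searched concurrently with `asyncio.gather`, results kept per sub-query.

```python
import asyncio

def fan_out(query: str) -> list[str]:
    """Hypothetical stand-in for the generative model that writes sub-queries."""
    return [
        query,
        f"{query} forecast this week",
        f"{query} rain chance",
        f"{query} UV index",
    ]

async def search(sub_query: str) -> list[str]:
    """Stub search call; a real system would query the index here."""
    await asyncio.sleep(0.01)  # pretend network latency
    slug = sub_query.lower().replace(" ", "-")
    return [f"{slug}/result-{i}" for i in range(1, 4)]

async def fanned_search(query: str) -> dict[str, list[str]]:
    """Run every sub-query concurrently and keep results keyed by sub-query."""
    sub_queries = fan_out(query)
    # Launched together, so total wait is roughly one search, not four
    results = await asyncio.gather(*(search(q) for q in sub_queries))
    return dict(zip(sub_queries, results))

results = asyncio.run(fanned_search("weather london"))
for sub_query, urls in results.items():
    print(sub_query, "->", urls[0])
```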

It will no longer be just about the query. Contextual information and topical authority will be more important than ever. Mike King’s overview of the patent that underpins AI Mode is essential reading.

But like every run-of-the-mill writer, I digress.

By fanning out, the AI can:

Explore various related angles that a broad search will miss

Access a wider diversity of sources

Identify connections and relationships between the sub-searches

Enhance information retrieval with a more holistic summary. Minimise errors. Amplify convenience.

This is exactly why some sources in an AIO can be from pages 5, 6 or 7. By fanning out into sub-topics, the AIO accesses a variety of SERPs. Highly cited, relevant pages that are adjacent to the main query may provide something different. Something useful. Something sources from the main query do not.

Less technically. Throw enough shit. See what sticks.
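One way to see how a page-six result ends up cited is to merge the rankings from each sub-query. Reciprocal rank fusion (RRF) is a common, simple way to do that; I’m using it as a stand-in, as none of the three patents says Google uses it. Each URL scores 1/(k + rank) in every list it appears in, so a page that sits reasonably well across several sub-query SERPs can beat the main query’s number one. URLs and rankings are invented.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: dict[str, list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists: each URL earns 1 / (k + rank) for every list it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranked_urls in rankings.values():
        for rank, url in enumerate(ranked_urls, start=1):
            scores[url] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

rankings = {
    "best running shoes": ["brand-a.com", "retailer-b.com", "review-c.com"],
    "running shoes for flat feet": ["podiatry-blog.com", "review-c.com"],
    "running shoes wide fit": ["review-c.com", "podiatry-blog.com"],
    "how to choose running shoes": ["podiatry-blog.com", "brand-a.com"],
}
for url, score in reciprocal_rank_fusion(rankings)[:3]:
    print(f"{score:.4f}  {url}")
```

`podiatry-blog.com` doesn’t appear in the main query’s results at all, yet it tops the merged list because it shows up in three of the sub-query SERPs. `k = 60` is a commonly used default; a larger `k` flattens the gap between ranks.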

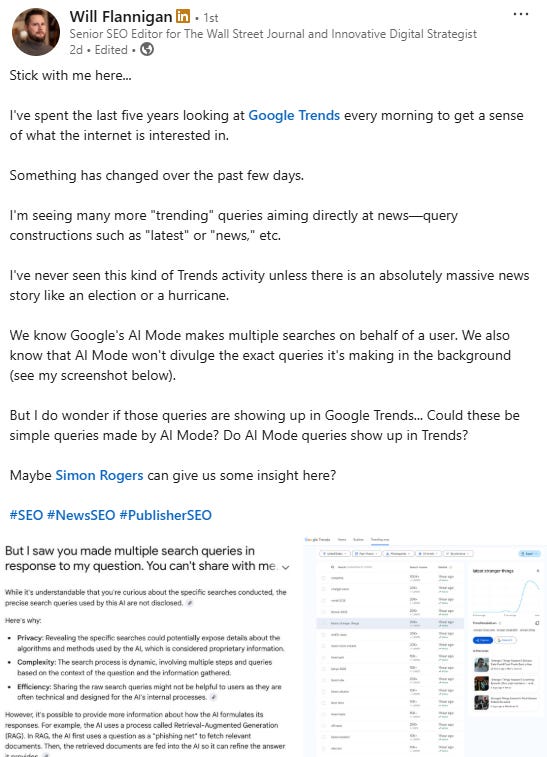

Some clever people have noticed that Google might be counting its own query fan out as an increased number of searches.

Naughty. Very naughty. Particularly as it could influence share prices… Allegedly, I should add.

Why does this matter for SEOs?

If you hadn’t noticed, things are changing. Visibility is being touted as the new king. Things have been too easy for too long.

Awareness. Influence. These are secondary goals we need to set (obviously don’t lose sight of traffic and revenue for God’s sake).

Unfortunately, AIOs are so poorly cited right now that this seems to be our best option. With AI Mode on the near horizon, this might be a good opportunity to get to grips with its deformed little brother.

Anyway, here is a breakdown of three key patents that help us understand what AIOs mean for users and SEOs.

Worth saying we don’t know for sure that these patents are all crucial to AIO development. But there are some good rules of thumb:

If the patent is present in multiple countries

If it has been cited multiple times. Recently, ideally

If it has been repatented (I think I’ve made that word up, but you get the gist)
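These rules of thumb are easy to turn into a quick checklist. A rough sketch using the figures quoted for the three patents in this piece (the European Patent Office and WIPO counted as separate jurisdictions). Refiling isn’t stated for any of them, so `refiled` defaults to False until you’ve checked the patent family yourself. Counting rules met is my own simplification, not an established metric.

```python
from dataclasses import dataclass

@dataclass
class Patent:
    name: str
    jurisdictions: int        # countries or patent offices it appears in
    citations: int            # total times cited
    citations_this_year: int  # citations in the current year
    refiled: bool = False     # "repatented"; not stated in the source, check manually

def rules_met(p: Patent) -> int:
    """Count how many of the rules of thumb a patent satisfies (0-4)."""
    checks = [
        p.jurisdictions > 1,        # present in multiple countries
        p.citations > 1,            # cited multiple times...
        p.citations_this_year > 0,  # ...recently, ideally
        p.refiled,                  # refiled
    ]
    return sum(checks)

patents = [
    Patent("Generative summaries for search results", 1, 31, 14),
    Patent("Generating query variants", 6, 49, 3),
    Patent("Generating an answer from a user's history", 1, 3, 0),
]
for p in sorted(patents, key=rules_met, reverse=True):
    print(rules_met(p), p.name)
```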

1. Generative summaries for search results

Generative summaries for search results helps large language models create more accurate and contextually relevant summaries. It broadens the search. Looking at more than just the initial search results gives the user a deeper understanding of the topic in a convenient, yet inaccurate format.

This is designed to make the summaries more reliable and reduce bullshit. By considering a wider range of information, the system can make fewer mistakes than a Geography student in Freshers’ Week.

Filing Date: March 20, 2023

Publication Date: September 26, 2023

Locations: USA only (four applications)

Cited: 31 times, 14 times this year

Main purpose: Enabling the integration of fresh, verifiable data from search results (grounding). Dynamic revision of summaries based on user interactions with search results.

2. Generating query variants using a trained generative model

Generating query variants using a trained generative model automatically generates variations of the original query during the search process. The query fan-out technique removes the need for manual query resubmission by the user, personalising results based on user demographics and history.

By exploring different angles and phrasings of the initial query, the system can generate more complete and nuanced summaries. More trustworthy, less contradictory, blah, blah, blah.

Technically, faster and more comprehensive.

Not too dissimilar to the Consensus Score that Mark Williams-Cook and team found.

Filing Date: April 29, 2017

Publication Date: May 30, 2023

Locations: USA, Korea, China, Japan, The European Patent Office and The World Intellectual Property Organization

Cited: 49 times, three times in 2025

Main purpose: The query fan-out technique generates diverse query variants for a submitted user query, and increases efficiency by removing manual query resubmission by the user. Personalising results based on user demographics and history.

3. Generating an answer from a user’s history

Generating answers from a user’s history helps create personalised AI Overviews. By leveraging user data and email history, AIOs can speak to you as an individual. Citing sources you trust.

Initially, a query classification component determines whether the user's intent is to retrieve previously accessed information.

Once classified, the system applies various filters, including topic, time, source, device, sender, or location, to narrow the search scope.

The search is primarily conducted within the user's personal data repositories, such as browser history, cached web pages, or emails, rather than the entire public web.

Faster. Cheaper.
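The three steps above (classify the intent, apply filters, search personal data) can be sketched as follows. Heavy assumptions: `RECALL_CUES` is a hypothetical keyword heuristic standing in for the patent’s trained classifier, the history items are made up, and only four of the listed filters (topic, time, source, device) are shown; sender and location would slot in the same way.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical cues; the patent describes a trained classifier, not a keyword list
RECALL_CUES = ("i read", "i saw", "i visited", "that article", "again", "last week")

@dataclass
class HistoryItem:
    title: str
    source: str  # website or email sender
    device: str
    seen_on: date

def wants_history(query: str) -> bool:
    """Step 1: does the user want something they've already seen?"""
    q = query.lower()
    return any(cue in q for cue in RECALL_CUES)

def filter_history(history: list[HistoryItem], today: date,
                   topic: Optional[str] = None, source: Optional[str] = None,
                   device: Optional[str] = None,
                   within_days: Optional[int] = None) -> list[HistoryItem]:
    """Steps 2-3: narrow the user's own history by topic, source, device and time."""
    hits = []
    for item in history:
        if topic and topic.lower() not in item.title.lower():
            continue
        if source and item.source != source:
            continue
        if device and item.device != device:
            continue
        if within_days is not None and today - item.seen_on > timedelta(days=within_days):
            continue
        hits.append(item)
    return hits

def route(query: str, history: list[HistoryItem], today: date,
          **filters) -> tuple[str, list[HistoryItem]]:
    """Search personal history when the intent is recall; otherwise fall through to the web."""
    if not wants_history(query):
        return "web", []
    return "history", filter_history(history, today, **filters)

today = date(2025, 6, 2)
history = [
    HistoryItem("Heather: a guide to UK moorland plants", "gardening-site", "phone", date(2025, 5, 29)),
    HistoryItem("Heather care in winter", "newsletter@garden", "laptop", date(2025, 5, 30)),
    HistoryItem("Elvis Presley biography", "music-site", "phone", date(2025, 3, 1)),
]
kind, hits = route("that article I read about heather last week", history, today,
                   topic="heather", device="phone", within_days=7)
print(kind, [h.title for h in hits])
```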

Accurately classifying query intent and applying specific filters elevates AIOs beyond fluffy summaries. We are moving towards hyper-personalised information delivery.

And the end of rank tracking.

Publication Date: January 30, 2025

Locations: USA (four times)

Cited: three times, none in 2025

Main purpose: Personalisation of summaries based on past interactions with the search interface. Hallucination mitigation. Summarising cached resources the user has already accessed is meant to keep the content factually accurate for that user.

What does this all mean for AIOs?

These patents don’t operate in isolation. They are symbiotic. Helping us understand how and why specific summaries are chosen.

Patent two (Query Variants) simultaneously analyses diverse but related searches, creating a richer, more diverse summary. Think of it like creating a more comprehensive article. Related topics matter.

The results from this richer search are then used by patent one (Generative Summaries) as additional content to ground the LLM's summary. Allegedly, this ensures the output is based on verifiable information and reduces hallucinations.

Finally, patent three (User History) creates a personalised summary. So both the additional content retrieved and the final AI summary are a) highly relevant and b) tailored to the user's past interactions.

Like a little dance between mating birds. The user inputs the query. Multiple query variants are searched simultaneously to provide a more holistic answer (query fan-out). The system then checks whether cached results can be served, and the results are grounded.

The AIOs are then personalised and designed to integrate various rich media formats to answer queries. As Google would say, ‘to create the best experience.’
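Strung together, the flow described above looks something like this skeleton. Every stage is passed in as a plain function because none of Google’s are public, the order follows the description above, and the lambdas in the example are placeholders. Searches run one after another here for brevity, where the description has them running simultaneously.

```python
from typing import Callable

def run_pipeline(
    query: str,
    fan_out: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
    cache: dict[str, str],
    summarise: Callable[[str, list[str]], str],
    personalise: Callable[[str], str],
) -> str:
    """Skeleton of the AIO flow as described above; each stage is a placeholder."""
    sub_queries = fan_out(query)                                 # 1. query fan-out
    documents = [doc for q in sub_queries for doc in search(q)]  # 2. search every variant
    if query in cache:                                           # 3. serve a cached summary if one exists
        summary = cache[query]
    else:
        summary = summarise(query, documents)                    # 4. grounded summary from the documents
        cache[query] = summary
    return personalise(summary)                                  # 5. tailor to the user

cache: dict[str, str] = {}
answer = run_pipeline(
    "best walking boots",
    fan_out=lambda q: [q, q + " waterproof", q + " wide fit"],
    search=lambda q: [f"page about {q}"],
    cache=cache,
    summarise=lambda q, docs: f"{q}: summary of {len(docs)} pages",
    personalise=lambda text: text + " (tailored to your history)",
)
print(answer)  # best walking boots: summary of 3 pages (tailored to your history)
```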

I imagine that accurate, rich structured data that supports Google’s (other LLMs are available) ability to understand the context of these media formats will be of growing importance. Probably for AIOs and multiple versions of AI Mode.

So what should we do?

Well, let’s start with what’s happening.

Summarised results (the immediate decline of TOFU traffic. Maybe MOFU too.)

Personalised, unique SERPs

A move to a more conversational form of search

Rank tracking is on the way out

Visibility and awareness are on the up

Seven steps to rank in AIOs

Invest in your brand. Brand visibility. Citations, links, branded search volume. Branded products. Branded tools. Things that stand out.

More so than ever before, you have to understand your audience. You have to understand them well enough to know what topics add value and the subsequent questions they will ask (I would check out SparkToro here).

Answer their questions concisely. Cindy Krum called them fraggles a few years ago. Not too dissimilar to featured snippets. Short, concise answers.

Make your content highly clickable (titles, images, curiosity gaps et al)

Write great content. Solve people’s problems. Make it easy for robots to digest.

Then make sure you cover the topic in detail. With multiple content formats. Deeper and richer than anybody else.

Mark those formats up effectively with structured data
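As an example of marking up one format, here’s schema.org `VideoObject` markup built as JSON-LD. Google’s video structured data documentation lists `name`, `thumbnailUrl` and `uploadDate` as required properties (worth re-checking the current docs); the URLs and values below are placeholders.

```python
import json

def video_jsonld(name: str, description: str, thumbnail_url: str,
                 upload_date: str, content_url: str) -> str:
    """Build schema.org VideoObject markup wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": [thumbnail_url],
        "uploadDate": upload_date,  # ISO 8601 date or date-time
        "contentUrl": content_url,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

print(video_jsonld(
    name="How to choose walking boots",
    description="A five-minute guide to fit, waterproofing and sole grip.",
    thumbnail_url="https://www.example.com/thumbs/walking-boots.jpg",
    upload_date="2025-05-20T08:00:00+01:00",
    content_url="https://www.example.com/video/walking-boots.mp4",
))
```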

Visibility is more important than it once was. When zero-click searches only happened for queries that didn’t warrant a click (“what time is it” being a prime example), it didn’t matter for brands. Now it does.

Significantly.

Whatever people will tell you, TOFU content can be incredibly helpful for brand awareness. A real and verifiable touchpoint.

For topics that matter as part of your user’s journey, AIOs can still add value. Solve their problems with quality content. Content that can’t be fully summarised by AI. Rich, insightful content.

Stand out. Google I/O gave us a clue where this is heading and it isn’t looking super positive for publisher traffic. But you never know…

Build your brand. Personal and all. Diversify your revenue streams. Wean yourself off Google where possible.

I haven’t tried this yet, but WordLift’s Andrea Volpini has created a tool that simulates the query fan-out approach. It’s meant to predict the likely questions your audience will ask.

Further reading:

Measuring topical authority (I tried this last year. One caveat for news sites is that we don’t trade on evergreen content in the same way as other publishers. So this doesn’t tell the whole picture)

Publisher survival playbook from NewzDash

As always, leave a comment. Let me know what you think. Shoulder to cry on. Etc.
